From information to knowledge: the what and whence of issue based information systems

Issue Based Information System (IBIS) is a notation invented by Horst Rittel and Werner Kunz in the early 1970s. IBIS is best known for its use in dialogue mapping, a collaborative approach to tackling wicked problems (i.e. contentious issues) in organisations. It has a range of other applications as well – capturing knowledge is a good example, and I’ll have much more to say about that later in this post.

Over the last five years or so, I have written a fair bit on IBIS on this blog and in a book that I co-authored with the dialogue mapping expert, Paul Culmsee. The present post reprises an article I wrote five years ago on the “what” and “whence” of the notation: its practical aspects – notation, grammar etc -, as well as its origins, advantages and limitations. My motivations for revisiting the piece are to revise and update the original discussion and, more important, to cover some recent developments in IBIS technology that open up interesting possibilities in the area of knowledge management.

To appreciate the power of the IBIS, it is best to begin by understanding the context in which the notation was invented. I’ll therefore start with a discussion of the origins of the notation followed by an introduction to it. Finally, I’ll cover its development through the last 40 odd years, focusing on the recent developments that I mentioned above.

Wicked origins

A good place to start is where it all started. IBIS was first described in a paper entitled, Issues as elements of Information Systems; written by Horst Rittel (the man who coined the term wicked problem) and Werner Kunz in July 1970. They state the intent behind IBIS in the very first line of the abstract of their paper:

Issue-Based Information Systems (IBIS) are meant to support coordination and planning of political decision processes. IBIS guides the identification, structuring, and settling of issues raised by problem-solving groups, and provides information pertinent to the discourse.

Rittel’s preoccupation was the area of public policy and planning – which is also the context in which he defined the term wicked problem originally. Given the above background it is no surprise that Rittel and Kunz foresaw IBIS to be the:

…type of information system meant to support the work of cooperatives like governmental or administrative agencies or committees, planning groups, etc., that are confronted with a problem complex in order to arrive at a plan for decision…

The problems tackled by such cooperatives are paradigm-defining examples of wicked problems. From the start, then, IBIS was intended as a tool to facilitate a collaborative approach to solving…or better, managing a wicked problem by helping develop a shared perspective on it.

A brief introduction to IBIS

The IBIS notation consists of the following three elements:

- Issues(or questions): these are issues that are being debated. Typically, issues are framed as questions on the lines of “What should we do about X?” where X is the issue that is of interest to a group. For example, in the case of a group of executives, X might be rapidly changing market condition whereas in the case of a group of IT people, X could be an ageing system that is hard to replace.

- Ideas(or positions): these are responses to questions. For example, one of the ideas of offered by the IT group above might be to replace the said system with a newer one. Typically the whole set of ideas that respond to an issue in a discussion represents the spectrum of participant perspectives on the issue.

- Arguments: these can be Pros (arguments for) or Cons (arguments against) an issue. The complete set of arguments that respond to an idea represents the multiplicity of viewpoints on it.

Compendium is a freeware tool that can be used to create IBIS maps– it can be downloaded here.

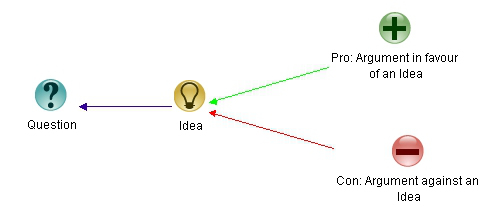

In Compendium, the IBIS elements described above are represented as nodes as shown in Figure 1: issues are represented by blue-green question marks; positions by yellow light bulbs; pros by green + signs and cons by red – signs. Compendium supports a few other node types, but these are not part of the core IBIS notation. Nodes can be linked only in ways specified by the IBIS grammar as I discuss next.

The IBIS grammar can be summarized in three simple rules:

- Issues can be raised anew or can arise from other issues, positions or arguments. In other words, any IBIS element can be questioned. In Compendium notation: a question node can connect to any other IBIS node.

- Ideas can only respond to questions– i.e. in Compendium “light bulb” nodes can only link to question nodes. The arrow pointing from the idea to the question depicts the “responds to” relationship.

- Arguments can only be associated with ideas– i.e. in Compendium “+” and “–“ nodes can only link to “light bulb” nodes (with arrows pointing to the latter)

The legal links are summarized in Figure 2 below.

Yes, it’s as simple as that.

The rules are best illustrated by example- follow the links below to see some illustrations of IBIS in action:

- See this postfor a simple example of dialogue mapping.

- See this postor this one for examples of argument visualisation. (Note: using IBIS to map out the structure of written arguments is called issue mapping.

- See this one for an example Paul did with his children. This example also features in our book. that made an appearance in our book.

Now that we know how IBIS works and have seen a few examples of it in action, it’s time to trace the history of the notation from its early days the present.

Operation of early systems

When Rittel and Kunz wrote their paper, there were three IBIS-type systems in operation: two in government agencies (in the US, one presumes) and one in a university environment (quite possibly Berkeley, where Rittel worked). Although it seems quaint and old-fashioned now, it is no surprise that these were manual, paper-based systems; the effort and expense involved in computerizing such systems in the early 70s would have been prohibitive and the pay-off questionable.

The Rittel-Kunz paper introduced earlier also offers a short description of how these early IBIS systems operated:

An initially unstructured problem area or topic denotes the task named by a “trigger phrase” (“Urban Renewal in Baltimore,” “The War,” “Tax Reform”). About this topic and its subtopics a discourse develops. Issues are brought up and disputed because different positions (Rittel’s word for ideas or responses) are assumed. Arguments are constructed in defense of or against the different positions until the issue is settled by convincing the opponents or decided by a formal decision procedure. Frequently questions of fact are directed to experts or fed into a documentation system. Answers obtained can be questioned and turned into issues. Through this counterplay of questioning and arguing, the participants form and exert their judgments incessantly, developing more structured pictures of the problem and its solutions. It is not possible to separate “understanding the problem” as a phase from “information” or “solution” since every formulation of the problem is also a statement about a potential solution.

Even today, forty years later, this is an excellent description of how IBIS is used to facilitate a common understanding of complex problems. Moreover, the process of reaching a shared understanding (whether using IBIS or not) is one of the key ways in which knowledge is created within organizations. To foreshadow a point I will elaborate on later, using IBIS to capture the key issues, ideas and arguments, and the connections between them, results in a navigable map of the knowledge that is generated in a discussion.

Fast forward a couple decades (and more!)

In a paper published in 1988 entitled, gIBIS: A hypertext tool for exploratory policy discussion, Conklin and Begeman describe a prototype of a graphical, hypertext-based IBIS-type system (called gIBIS) and its use in capturing design rationale (yes, despite the title of the paper, it is more about capturing design rationale than policy discussions). The development of gIBIS represents a key step between the original Rittel-Kunz version of IBIS and its more recent version as implemented in Compendium. Amongst other things, IBIS was finally off paper and on to disk, opening up a world of new possibilities.

gIBIS aimed to offer users:

- The ability to capture design rationale – the options discussed (including the ones rejected) and the discussion around the pros and cons of each.

- A platform for promoting computer-mediated collaborativedesign work – ideally in situations where participants were located at sites remote from each other.

- The ability to store a large amount of information and to be able to navigate through it in an intuitive way.

The gIBIS prototype proved successful enough to catalyse the development of Questmap, a commercially available software tool that supported IBIS. In a recent conversation Jeff Conklin mentioned to me that Questmap was one of the earliest Windows-based groupware tools available on the market…and it won a best-of-show award in that category. It is interesting to note that in contrast to Questmap (which no longer exists), Compendium is a single-user, desktop software.

The primary application of Questmap was in the area of sensemaking which is all about helping groups reach a collective understanding of complex situations that might otherwise lead them into tense or adversarial conditions. Indeed, that is precisely how Rittel and Kunz intended IBIS to be used. The key advantage offered by computerized IBIS systems was that one could map dialogues in real-time, with the map representing the points raised in the conversation along with their logical connections. This proved to be a big step forward in the use of IBIS to help groups achieve a shared understanding of complex issues.

That said, although there were some notable early successes in the real-time use of IBIS in industry environments (see this paper, for example), these were not accompanied by widespread adoption of the technique. It is worth exploring the reasons for this briefly.

The tacitness of IBIS mastery

The reasons for the lack of traction of IBIS-type techniques for real-time knowledge capture are discussed in a paper by Shum et. al. entitled, Hypermedia Support for Argumentation-Based Rationale: 15 Years on from gIBIS and QOC. The reasons they give are:

- For acceptance, any system must offer immediate value to the person who is using it. Quoting from the paper, “No designer can be expected to altruistically enter quality design rationale solely for the possible benefit of a possibly unknown person at an unknown point in the future for an unknown task. There must be immediate value.” Such immediate value is not obvious to novice users of IBIS-type systems.

- There is some effort involved in gaining fluency in the use of IBIS-based software tools. It is only after this that users can gain an appreciation of the value of such tools in overcoming the limitations of mapping design arguments on paper, whiteboards etc.

While the rules of IBIS are simple to grasp, the intellectual effort – or cognitive overhead in using IBIS in real time involves:

- Teasing out issues, ideas and arguments from the dialogue.

- Classifying points raised into issues, ideas and arguments.

- Naming (or describing) the point succinctly.

- Relating (or linking) the point to the existing map (or anticipating how it will fit in later)

- Developing a sense for conversational patterns.

Expertise in these skills can only be developed through sustained practice, so it is no surprise that beginners find it hard to use IBIS to map dialogues. Indeed, the use of IBIS for real-time conversation mapping is a tacit skill, much like riding a bike or swimming – it can only be mastered by doing.

Making sense through IBIS

Despite the difficulties of mastering IBIS, it is easy to see that it offers considerable advantages over conventional methods of documenting discussions. Indeed, Rittel and Kunz were well aware of this. Their paper contains a nice summary of the advantages, which I paraphrase below:

- IBIS can bridge the gap between discussions and records of discussions (minutes, audio/video transcriptions etc.). IBIS sits between the two, acting as a short-term memory. The paper thus foreshadows the use of issue-based systems as an aid to organizational or project memory.

- Many elements (issues, ideas or arguments) that come up in a discussion have contextual meanings that are different from any pre-existing definitions. That is, the interpretation of points made or questions raised depends on the circumstances surrounding the discussion. What is more important is that contextual meaning is more important than formal meaning. IBIS captures the former in a very clear way – for example a response to a question “What do we mean by X?” elicits the meaning of X in the context of the discussion, which is then subsequently captured as an idea (position)”. I’ll have much more to say about this towards the end of this article.

- The reasoning used in discussions is made transparent, as is the supporting (or opposing) evidence.

- The state of the argument (discussion) at any time can be inferred at a glance (unlike the case in written records). See this post for more on the advantages of visual documentation over prose.

- Often times it happens that the commonality of issues with other, similar issues might be more important than its precise meaning. To quote from the paper, “…the description of the subject matter in terms of librarians or documentalists (sic) may be less significant than the similarity of an issue with issues dealt with previously and the information used in their treatment…” This is less of an issue now because of search of technologies. However, search technologies are still largely based on keywords rather than context. A properly structured, context-searchable IBIS-based archive would be more useful than a conventional document-based system. As I’ll discuss in the next section, the technology for this is now available.

To sum up, then: although IBIS offers a means to map out arguments what is lacking is the ability to make these maps available and searchable across an organization.

IBIS in the enterprise

It is interesting to note that Compendium, unlike its predecessor, Questmap, is a single-user, desktop tool – it does not, by itself, enable the sharing of maps across the enterprise. To be sure, it is possible work around this limitation but the workarounds are somewhat clunky. A recent advance in IBIS technology addresses this issue rather elegantly: Seven Sigma, an Australian consultancy founded by Paul Culmsee, Chris Tomich and Peter Chow, has developed Glyma (pronounced “glimmer”): a product that makes IBIS available on collaboration platforms like Microsoft SharePoint. This is a game-changer because it enables sharing and searching of IBIS maps across the enterprise. Moreover, as we shall see below, the implications of this go beyond sharing and search.

Full Disclosure: As regular readers of this blog know, Paul is a good friend and we have jointly written a book and a few papers. However, at the time of writing, I have no commercial association with Seven Sigma. My comments below are based on playing with beta version of the product that Paul was kind enough to give me to access to as well as some discussions that I have had with him.

The look and feel of Glyma is much the same as Compendium (see Fig 3 above) – and the keystrokes and shortcuts are quite similar. I have trialled Glyma for a few weeks and feel that the overall experience is actually a bit better than in Compendium. For example one can navigate through a series of maps and sub-maps using a breadcrumb trail. Another example: documents and videos are embedded within the map – so one does not need to leave the map in order to view tagged media (unless of course one wants to see it at a higher resolution).

I won’t go into any detail about product features etc. since that kind of information is best accessed at source – i.e. the product website and documentation. Instead, I will now focus on how Glyma addresses a longstanding gap in knowledge management systems.

Revisiting the problem of context

In most organisations, knowledge artefacts (such as documents and audio-visual media) are stored in hierarchical or relational structures (for example, a folder within a directory structure or a relational database). To be sure, stored artefacts are often tagged with metadata indicating when, where and by whom they were created, but experience tells me that such metadata is not as useful as it should be. The problem is that the context in which an artefact was created is typically not captured. Anyone who has read a document and wondered, “What on earth were the authors thinking when they wrote this?” has encountered the problem of missing context.

Context, though hard to capture, is critically important in understanding the content of a knowledge artefact. Any artefact, when accessed without an appreciation of the context in which it was created is liable to be misinterpreted or only partially understood.

Capturing context in the enterprise

Glyma addresses the issue of context rather elegantly at the level of the enterprise. I’ll illustrate this point an inspiring case study on the innovative use of SharePoint in education that Paul has written about some time ago.

The case study

Here is the backstory in Paul’s words:

Earlier this year, I met Louis Zulli Jnr – a teacher out of Florida who is part of a program called the Centre of Advanced Technologies. We were co-keynoting at a conference and he came on after I had droned on about common SharePoint governance mistakes…The majority of Lou’s presentation showcased a whole bunch of SharePoint powered solutions that his students had written. The solutions were very impressive…We were treated to examples like:

- IOS, Android and Windows Phone apps that leveraged SharePoint to display teacher’s assignments, school events and class times;

- Silverlight based application providing a virtual tour of the campus;

- Integration of SharePoint with Moodle (an open source learning platform)

- An Academic Planner web application allowing students to plan their classes, submit a schedule, have them reviewed, track of the credits of the classes selected and whether a student’s selections meet graduation requirements;

All of this and more was developed by 16 to 18 year olds and all at a level of quality that I know most SharePoint consultancies would be jealous of…

Although the examples highlighted by Louis were very impressive, what Paul found more interesting were the anecdotes that Lou related about the dedication and persistence that students displayed in their work. Quoting again from Paul,

So the demos themselves were impressive enough, but that is actually not what impressed me the most. In fact, what had me hooked was not on the slide deck. It was the anecdotes that Lou told about the dedication of his students to the task and how they went about getting things done. He spoke of students working during their various school breaks to get projects completed and how they leveraged each other’s various skills and other strengths. Lou’s final slide summed his talk up brilliantly:

- Students want to make a difference! Give them the right project and they do incredible things.

- Make the project meaningful. Let it serve a purpose for the campus community.

- Learn to listen. If your students have a better way, do it. If they have an idea, let them explore it.

- Invest in success early. Make sure you have the infrastructure to guarantee uptime and have a development farm.

- Every situation is different but there is no harm in failure. “I have not failed. I’ve found 10,000 ways that won’t work” – Thomas A. Edison

In brief: these points highlight the fact that Lou’s primary role as director of the center is to create the conditions that make it possible for students to do great work. The commercial-level quality of work turned out by students suggests that Lou’s knowledge on how to build high-performing teams is definitely worth capturing.

The question is: what’s the best way to do this (short of getting him to visit you and talk about his experiences)?

Seeing the forest for the trees

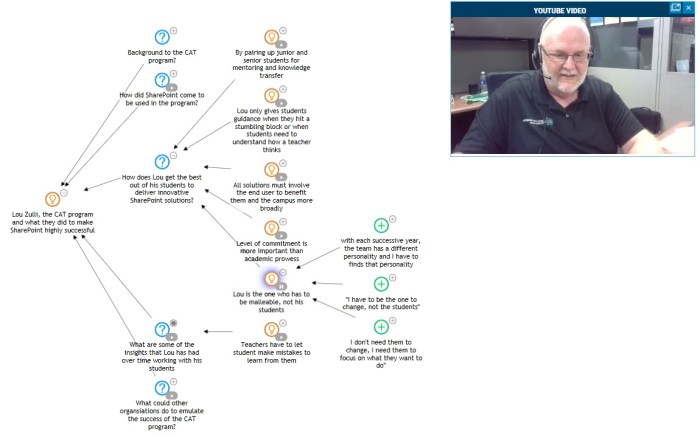

Paul recently interviewed Lou with the intent of documenting Lou’s experiences. The conversation was recorded on video and then “Glymafied” it – i.e the video was mapped using IBIS (see Figure 4 below).

There are a few points worth noting here:

- The content of the entire conversation is mapped out so one can “see” the conversation at a glance.

- The context in which a particular point (i.e. the content of a node) is made is clarified by the connections between a node and its neighbours. Moving left from a node gives a higher level picture, moving right drills down into details.

Of course, the reader will have noted that these are core IBIS capabilities that are available in Compendium (or any other IBIS tool). Glyma offers much more. Consider the following:

- Relevant documents or audio visual media can be tagged to specific nodes to provide supplementary material. In this case the video recording was tagged to specific nodes that related to points made in the video. Clicking on the play icon attached to such a node plays the segment in which the content of the node is being discussed. This is a really nice feature as it saves the user from having to watch the whole video (or play an extended game of ffwd-rew to get to the point of interest). Moreover, this provides additional context that cannot (or is not) captured by in the map. For example, one can attach papers, links to web pages, Slideshare presentations etc. to fill in background and context.

- Glyma is integrated with an enterprise content management system by design. One can therefore link map and video content to the powerful built-in search and content aggregation features of these systems. For example, users would be able enter a search from their intranet home page and retrieve not only traditional content such as documents, but also stories, reflections and anecdotes from experts such as Lou.

- Another critical aspect to intranet integration is the ability to provide maps as contextual navigation. Amazon’s ability to sell books that people never intended to buy is an example of the power of such navigation. The ability to execute the kinds of queries outlined in the previous point, along with contextual information such as user profile details, previous activity on the intranet and the area of an intranet the user is browsing, makes it possible to present recommendations of nodes or maps that may be of potential interest to users. Such targeted recommendations might encourage users to explore video (and other rich media) content.

Technical Aside: An interesting under-the-hood feature of Glyma is that it uses an implementation of a hypergraph database to store maps. (Note: this is a database that can store representations of graphs (maps) in which an edge can connect to more than 2 vertices). These databases enable the storing of very general graph structures. What this means is that Glyma can be extended to store any kind of map (Mind Maps, Concept Maps, Argument Maps or whatever)…and nodes can be shared across maps. This feature has not been developed as yet, but I mention it because it offers some exciting possibilities in the future.

To summarise: since Glyma stores all its data in an enterprise-class database, maps can be made available across an organization. It offers the ability to tag nodes with pretty much any kind of media (documents, audio/video clips etc.), and one can tag specific parts of the media that are relevant to the content of the node (a snippet of a video, for example). Moreover, the sophisticated search capabilities of the platform enable context aware search. Specifically, we can search for nodes by keywords or tags, and once a node of interest is located, we can also view the map(s) in which it appears. The map(s) inform us of the context(s) relating to the node. The ability to display the “contextual environment” of a piece of information is what makes Glyma really interesting.

In metaphorical terms, Glyma enables us to see the forest for the trees.

…and so, to conclude

My aim in this post has been to introduce readers to the IBIS notation and trace its history from its origins in issue mapping to recent developments in knowledge management. The history of a technique is valuable because it gives insight into the rationale behind its creation, which leads to a better understanding of the different ways in which it can be used. Indeed, it would not be an exaggeration to say that the knowledge management applications discussed above are but an extension of Rittel’s original reasons for inventing IBIS.

I would like to close this piece with a couple of observations from my experience with IBIS:

Firstly, the real surprise for me has been that the technique can capture most written arguments and conversations, despite having only three distinct elements and a very simple grammar. Yes, it does require some thought to do this, particularly when mapping discussions in real time. However, this cognitive overhead is good because it forces the mapper to think about what’s being said instead of just writing it down blind. Thoughtful transcription is the aim of the game. When done right, this results in a map that truly reflects an understanding of a complex issue.

Secondly, although most current discussions of IBIS focus on its applications in dialogue mapping, it has a more important role to play in mapping organizational knowledge. Maps offer a powerful means to navigate the complex network of knowledge within an organisation. The (aspirational) end-goal of such an effort would be a “global” knowledge map that highlights interconnections between different kinds of knowledge that exists within an organization. To be sure, such a map will make little sense to the human eye, but powerful search capabilities could make it navigable. To the extent that this is a feasible direction, I foresee IBIS becoming an important skill in the repertoire of knowledge management professionals.

An introduction to emergent design

Note: For an updated piece on emergent design please see: https://eight2late.wordpress.com/2023/05/24/what-is-emergent-design/

Introduction

Over the last few months, I’ve published a number of posts in which the term emergent design makes a cameo appearance (see this article or this interview for example). Some readers may have noticed that although the term is used in various contexts in the articles/interviews, it is not explicitly defined anywhere. This is deliberate. Emergent design is…well, emergent, so a detailed definition is neither necessary nor useful – providing one can describe a set of guidelines for its practice. My main aim in this post is to do just that. To keep things concrete I will discuss the guidelines in the context of technical initiatives in organisations.

(Note: Before going any further a couple of clarifications are in order. Firstly, the word emergent as used here has nothing to do with emergence in complex systems. Secondly, the guidelines provided here are a starting point, not a comprehensive list.)

The wickedness of technology

Most technical initiatives in large organisations are planned, designed and executed in a top-down manner, with little or no attempt to understand the existing culture and / or on-the-ground realities. This observation applies not only to enterprise software projects, such as those involving Collaboration or Customer Relationship Management platforms, but also to the establishment of new functions such as building a data science capability.

Top down approaches are liable to fail because technical initiatives display many of the characteristics of wicked problems. In particular, they are:

- Are one-shot operations – for example, an ERP system is simply too expensive to implement over and over again.

- Have no stopping rule – technical systems are never completely done; there are always things to be fixed and additional features to be implemented.

- Are highly contentious – whether or not an initiative is good, or even necessary, depends on who you ask.

- Could be done in other, possibly “better”, ways – and the problem is that one person’s “better” is another one’s “worse”!

- Are essentially unique – and don’t let vendors or Big $$$ consultants tell you otherwise!

These characteristics make technical problems socially complex – that is, different stakeholder groups have different perceptions of the problem that the initiative is intended to address. The most important implication of social complexity is that it cannot be tackled using rational methods of planning, design and implementation that are taught in schools, propagated in books, or evangelized by standards authorities and assorted snake oil salespersons. For these reasons, it is more accurate to refer to such problems as sociotechnical problems.

Enter emergent design

The term emergent design was coined by David Cavallo in the context of technology-driven education reforms in indigenous cultures (the original paper by David Cavallo can be accessed here). Cavallo observed that traditional systems engineering approaches that attempt to change an educational system in a top-down manner fail primarily because they do not take into account the unique features of local cultures. Instead, he found that using the existing culture as a starting point from which to work towards systemic change offered a much better chance of the new ways taking root. In his words, “[the] adoption and implementation of new methodologies needs to be based in, and grow from, the existing culture.”

Cavallo’s words hold the key to applying emergent design in the context of sociotechnical initiatives: it is to take the concerns of those affected by the initiatives seriously.

“Ah, so it’s like Agile software development,” concludes the Agilista.

Not quite. As I will discuss in the remainder of this post, although emergent design shares a few number features with Agile methods, there’s considerably more to it than that. That said, chances are that good Agile coaches are emergent design practitioners without knowing it. This is something that will become apparent as we go on.

Guidelines for emergent design

I have, for a while, been thinking about what emergent design means in the context of sociotechnical initiatives in organisations. Among other things, I have been looking at how it might be applied to a wide variety initiatives that are traditionally planned upfront – things such as offshoring and enterprise-wide projects such as data warehouse or enterprise resource planning initiatives.

In one of those serendipitous occurrences, last week I happened re-read an old series of articles entitled Confessions of a post-SharePoint Architect written by my friend, the ace sensemaker and emergent entrepreneur, Paul Culmsee. Although the series focuses on emergent design principles in the context of the Microsoft SharePoint platform, many of the points that Paul makes apply to sociotechnical initiatives in general. In addition to material drawn from Paul’s blog, I also borrow from a few posts on my blog. In the interests of space I have provided only a brief overview of the points because they have been elaborated elsewhere. The original pieces fill in a lot of relevant detail, so please do follow the links if you have the inclination and the time.

With that said, let’s get to it.

Be a midwife rather than an expert

In a paper entitled, On the planning crisis, Horst Rittel (the man who coined the term wicked problem) wrote:

You do not learn in school how to deal with wicked problems…expertise and ignorance is distributed over all participants in a wicked problem. There is a symmetry of ignorance among those who participate because nobody knows better by virtue of his degrees or his status. There are no experts (which is irritating for experts), and if experts there are, they are only experts in guiding the process of dealing with a wicked problem, but not for the subject matter of the problem.

The first guideline of emergent design is to realize that no one is an expert – not you nor your Big $$$ consultant. The only way to build a robust and lasting system or process is for everyone to put their heads together and work towards it in a collaborative fashion, dispensing with the pretence that one can outsource one’s thinking to the “expert”. Indeed, the role of the “expert” is to create and foster the conditions for such collaboration to occur. Paul and I elaborate on this point at length in our book and this paper (summarized in this post).

In brief, the knowledge required to successfully implement a sociotechnical initiative is distributed across all stakeholders (analysts, consultants, architects and, above all, users). Pulling all this together into a coherent whole has more to do with facilitation and people skills than technology.

Ensure that governance is about enablement rather than control

Most organisations have onerous procedures to ensure that people do the right thing. I submit that many of these encourage a checkbox-based compliance mentality aimed at ensuring that people comply in letter, but not necessarily in spirit (actually, never in spirit). As Paul mentions in this post governance ought to be about enablement rather than compliance or control.

There’s a very simple test to tell one from the other: when you come across a procedure such as an SOP or a methodology that you are required to follow, ask yourself this question: does this help me do my job?

If the answer positive, the procedure is an enabler; if not, it is likely a control that is primarily intended as a CYA mechanism.

Do not penalize people for learning

The main rationale behind iterative and incremental approaches to technical projects is that they encourage (and take advantage) of continuous learning. Incremental increases in functionality are easier to test exhaustively and errors are also easier to correct. Reviews and retrospectives also tend to be more focused leading to a better chance of lessons actually being learnt. Thanks to the Agile movement, this is now well known and understood in the world of tech.

However, learning is not just a matter of using iterative/incremental methodologies; one also needs to build an environment that encourages it. This is trickier matter because it depends on things that are outside an individual manager’s control; indeed it has more to do with the entire function or even the organization. In an organisation with a strong blame culture, the culture tends to win against pretty much any methodology, agile or otherwise. Blame cultures preclude learning because mistakes are punished and people are scapegoated as a result. Check out this article on learning organizations for more on this topic, and this post for a more nuanced (realistic?) view.

With that said for the importance of learning, it is also important to note that there are some situations where learning is less important. This is the case for work for that can be planned and scripted in detail up front. It is important to be able to distinguish between the two types of situations…which brings us to the next point.

Understand the difference between complicated and complex initiatives

Requirements analysis is one of the first activities in traditional system development. Most technical initiatives that are driven by a vendor or consultant will have many sessions for this at the front-end of an engagement. Enterprise wisdom tells us that things need to be specified in detail at the start. The rationale behind this is to set requirements in stone so that the entire project can be planned in detail before actual implementation begins. Such an approach is fine if one knows for sure:

- How the future is going to unfold and has appropriate contingencies in place for adverse events;

- That users have a clear idea of what they want, and

- That requirements will not change (or will change minimally).

It is obvious that this approach will be disastrous if any of the above assumptions are incorrect. Unfortunately it is more often the case that the assumptions do not hold for sociotechnical initiatives.

So how does one distinguish between initiatives that can be planned in detail upfront and those that can’t?

The distinction is best illustrated via an example: consider a project to replace a fixed line phone system by VoIP versus an initiative to set up a data science function. The first project has a fixed set of requirements across different groups. The second one, in contrast, involves diverse stakeholder groups, each with their own unique expectations of the system. Both projects are complicated from the technology point of view, but the second one has elements of wickedness arising from social complexity. Consequently, the two projects cannot be run in the same way. In particular, the first one can be planned in detail upfront while the second one cannot. Borrowing from David Snowden’s Cynefin framework, we call the first type of project complicated and the second one complex. You need to understand which kind of initiative you are dealing with before deciding which approach would be appropriate.

Beware of platitudinous goals

The technology marketplace is one that is largely buzzword driven. An in-vogue buzzwords at the time this piece was written is AI. Buzzwords, while sounding “right”, are actually platitudes – words that are devoid of meaning because different people interpret and use them differently. The use of platitudes, therefore, results in confusion rather than clarity. For example, does it mean (in the context of an organisation) to “implement AI”?

People tend to use platitudes as mental shortcuts to avoid thinking things through and coming up with their own opinions. It is therefore pointless to ask a person who uses a platitude to clarify what he or she means: they have not thought it through and will therefore be unable to give you a good answer.

The best way to deconstruct a platitude is via an oblique approach that is best illustrated through an example.

Say someone tells you that they want to implement AI. Asking them to define AI is not a good way to go because the answer you get is likely to be couched in further platitudes such as higher automation and insight. Instead, it is better to ask them what difference would be apparent if AI were to be implemented. To answer this question, they would have to come down from platitude-land and start thinking about concrete, measurable outcomes.

Use open questions to broaden perspectives

A more general point to note from the foregoing is that the framing of questions matters, particularly when one wants people to come up with ideas. For example, instead of asking people what they like (or dislike) about a particular approach, it is generally better to ask them what aspects of the approach are important to them. The latter question is neutrally framed so it does not impose any constraints on their thinking

Another good way to get people thinking about possibilities is to ask them what they would like to do if there were no constraints (such as budget or time, say). Conversely, if you encounter a constraining factor (like a company policy), it is sometimes helpful to ask what the intent behind the policy is.

If posed in the right way and in the right environment, answers to such questions get people to think beyond their immediate concerns and focus on purposes and outcomes instead.

Check out Paul’s posts on powerful questions to find out more about these perspective-expanding questions.

Understand the need for different types of thinking

One of the ironies of sociotechnical initiatives is that the most important decisions have to be made when the information available is the least. As I wrote in the introduction to this paper,

The early stages of projects are fraught with ambiguity. Yet, it is at this “front end” of projects that the most important decisions have to be made. Front-end decisions are hard because there is:

- uncertainty about scope, i.e. about what needs to be done;

- uncertainty about rationale, i.e. why it needs to be done; and

- uncertainty about approach, i.e. how it should be done.

Arguably, the lack of clarity regarding any of these can sow the seeds of failure in the early stages of a project.

The standard approach is to treat uncertainty as a problem that can be solved through convergent thinking – i.e. the kind of thinking that assumes a problem has a single “correct answer.” However, the uncertainty associated with sociotechnical projects has a social dimension; different people have different perceptions of things like scope and rationale, as well as different coping mechanisms for ambiguity. Thus sociotechnical uncertainty is a wicked problem that has no single “right answer.” This can cause anxiety for some. One therefore needs to begin with divergent thinking, which is largely about generating ideas and options and move to convergent thinking only when:

- The group has a shared understanding of the main issues.

- An adequate set of options have been generated.

As I alluded to above, people tend to show a preference for one type of thinking over the other. The strength of collaborative problem solving lies precisely in the fact that a group is likely to have a mix of the two types of thinkers.

It is perhaps obvious, but still worth mentioning that the other standard way to deal with uncertainty is to impose a solution by diktat or governance. Clearly such approaches are doomed to fail because they suppress the problem instead of solving it.

Consider long term consequences

It is an unfortunate fact of life that cost tends to be the ultimate arbiter on organisational decisions. Vendors know this, and take advantage of it when crafting their proposals. The contract goes to the lowest bidder and the rest, as they say, is tragedy. Although cost is an important criterion in technical decisions, making it the overriding concern is a recipe for future disappointment.

The general lesson to draw from this is that one must consider the longer-term consequences of one’s choices. This can be hard to do because the distant future is less salient than the present or the immediate future. A good way to look beyond immediate concerns (such as cost) is to use the solution after next principle proposed by Gerald Nadler and Shozo Hibino in their book, Breakthrough Thinking. The basic idea behind the principle is to get people to focus on the goals that lie beyond the immediate goal. The process of thinking about and articulating longer-term goals can often provide insights into potential problems with the current goals and/or how they are being achieved.

Build in spare capacity

In his book on antifragility, Nicholas Taleb points out that the opposite of fragility is not robustness or resilience, rather it is the ability to thrive on or benefit from uncertainty. There is no word in the English language to describe such behavior, and that is what led him to coin the term antifragile.

In a post inspired by the book, I outlined the elements of an antifragile IT strategy. One of the key points of such a strategy is the assumption that, despite our best laid plans, it is inevitable that something important will have been overlooked. It is therefore important to build in some spare capacity to deal with the unexpected events and demands.

Design so as to increase your future choices

This is perhaps the most important point in my list because it encapsulates all the other points. I have adapted it from Heinz von Foerster’s ethical imperative which states that one should always act so as to increase the number of choices in the future. This principle is useful as a tiebreaker between two designs that are close in all other respects. However, there is more to it than just that. I have found it particularly useful in making decisions that have wider, social consequences beyond technology. There is very little critical scrutiny of the benefits of these as claimed by vendors and advisories. This principle can help you see through the fog of marketing rhetoric and advisory hype.

Parting thoughts

One of the paradoxes of life is that the harder we strive for something – money, happiness or whatever – the more unattainable it seems to become. Indeed, some of the most financially successful people (Bill Gates and Warren Buffett, for example) became rich by doing what they loved. Their financial success was a happy byproduct of their engagement in their work. The economist John Kay formalized this paradoxical notion in his concept of obliquity – that certain goals are best attained via indirect means.

If you have been patient enough to read through this piece, you will have noted that some of the guidelines listed above have a hint of obliquity about them. This is no surprise; indeed it is inevitable in a design approach that values people over processes and improvisation (or even serendipity) over planning.

I usually conclude my posts with a summary of the main message. For reasons that should be obvious I will not do that here. Instead, I will end by pointing out yet another feature of emergent design that you have likely figured out already: the guidelines listed above are actually domain neutral; they can be applied to pretty much any area of endeavour. This is no surprise: wicked problems aren’t domain specific and therefore neither are the techniques to deal with them. For example, see my interview with Neil Preston for a perspective on emergent design in organizational change and my book co-authored with Paul for ways in which it can be applied to domains as diverse as town planning and knowledge management.

…and now I really must stop for I have gone on too long. Many thanks for your patience!

Acknowledgement

My thanks go out to Paul Culmsee for his feedback on a draft version of this post.

Six heresies for enterprise architecture

Introduction

In a recent article in Forbes, Jason Bloomberg asks if Enterprise Architecture (EA) is “completely broken”. He reckons it is, and that EA frameworks, such as TOGAF and the Zachman Framework, are at least partly to blame. Here’s what he has to say about frameworks:

EA generally centers on the use of a framework like The Open Group Architecture Framework (TOGAF), the Zachman Framework™, or one of a handful of others. Yet while the use of such frameworks can successfully lead to business value, [they] tend to become self-referential… that [Enterprise Architects end up] spending all their effort working with the framework instead of solving real problems.

From experience, I’d have to agree: many architects are so absorbed by frameworks that they overlook their prime imperative, which is to deliver tangible value rather than pretty diagrams.

In this post I present six (possibly heretical!) practices that underpin an evolutionary or emergent approach to enterprise architecture. I believe that these will go some way towards addressing the issues that Bloomberg and others bemoan.

The heresies

Here are they are, in no particular order:

1. Use an incremental approach

As Bloomberg notes in the passage above, many enterprise architects spend a great deal of their time creating blueprints and plans based on frameworks. The problem is, this activity rarely leads to anything of genuine business value because blueprints or plans are necessarily:

- Incomplete – they are high-level abstractions that omit messy, ground-level details. Put another way, they are maps that should not be confused with the territory.

- Static – they are based on snapshots of an organization and its IT infrastructure at a point in time. Even roadmaps that are intended to describe how organisations’ processes and technologies will evolve in time are, in reality, extrapolations from information available at a point in time.

The straightforward way to address this is to shun big architectures upfront and embrace an iterative and incremental approach instead. The Agile movement has shown the way forward for software development. There are many convincing real-life demonstrations of the use of agile approaches to enterprise-scale initiatives (Ralph Hughes’ approach to Agile data warehousing, for example). Such examples suggest that the principles behind agile software development can be fruitfully adapted to the practice of enterprise architecture.

The key principle is to start as small as possible and scale-up from there. Such an approach would enable architects to learn along the way, giving them a better chance of getting their heads around those messy but important details that get overlooked when one considers the entire enterprise upfront. Another benefit is that it would give them adequate time to develop an awareness of the factors that are prone to change frequently and those that will remain relatively static. Most important, though, is that it would offer several opportunities to correct errors and fine tune things at little extra cost.

2. Understand the unique features of your organisation

Enterprise architects tend to be people with a wide experience in technology. As a result they are often treated (and see themselves) as experts. The danger of this attitude is that it can lead them to believe that they have privileged insights into the ills of their organizations. A familiarity with frameworks tends to reinforce this view because frameworks, with their focus on standardization, tend to emphasise the common features of organisations. However, I believe that instead of focusing on similarities an enterprise architect would be better served by focusing on differences – the things that make his or her organisation unique. Only when one develops an understanding of differences can one start to build an architecture that actually supports the organization.

To be sure, there are structures and processes that are common to many organisations (for example, functions such as 1st level support or expense reimbursement processes) and these could potentially benefit from the implementation of standardized designs / practices. However, the identification of such commonalities should be an outcome of research rather than an upfront assumption. And this requires ongoing, open dialogue with key stakeholders in the business (more on that later)

3. Form your own opinions about what works and what doesn’t

The surfeit of standardized “best practice” approaches to EA tends to make enterprise architects intellectually lazy. This is actually a symptom of a wider malaise in IT: I have noticed that professionals in the upper echelons of IT are happy to “outsource their thinking” by following someone else’s advice or procedures without much thought. The problem with this attitude, penalty clauses notwithstanding, is that one cannot “outsource the blame” when things go wrong.

I therefore believe one of the key attributes of a good architect is to develop a critical attitude towards frameworks, “best practices” and even consulting advice (especially if it comes from a Big 4 consultancy or guru). If you’re really as experienced as you claim to be, you need to develop your own opinions of what works and what doesn’t and be willing to subject those opinions to scrutiny by others.

4. Understand your organisation’s constraints

Humans tend to be over-optimistic when it comes to planning: witness the developer who blithely tells his boss that it will take a week to do a certain task…and then takes a month because of problems he had not anticipated. Similarly, many architects create designs that look great on paper but are not realistic because they have overlooked potential organizational constraints. Examples of such constraints include:

- Top-down reporting structures in which all decisions have to be made or approved by top management.

- Rigid organizational hierarchies that discourage cross-functional communication and collaboration.

Although constraints can also be technical (such as the limitations of a particular technology), the above examples illustrate that organizational constraints are considerably harder to address, primarily because they involve changing behaviour of influential people within the organization.

Architects lack the positional authority to do anything about such constraints directly. However, they need to develop an understanding of the main constraints so that they can bring them to the attention of those who can do something about them.

5. Focus on problem finding rather than problem solving

In his book, Management in Small Doses, Russell Ackoff wrote that in the real world problems are seldom given; they must be taken. This is good advice for enterprise architects to live by. Indeed, one of the shortcomings of frameworks is that they tend to present the architect with ready-made problems – specifically, any process or technology that does not comply with the framework is seen as a problem. Consequently, many architects spend considerable effort in fixing such “deviations”. However, non-conforming processes or technologies are seldom the most pressing problems that the organization faces. Instead, an enterprise architect will be better served by of finding problems that actually need fixing.

6. Understand the social implications of enterprise architecture

Enterprise architecture is seen as a primarily technical undertaking. As a result architects often overlook the social implications of their designs. Here are a couple of common ways in which this happens:

- Enterprise architecture invariably involves trade-offs. When evaluating these, architects typically focus on economic variables such as cost, productivity, efficiency, throughput etc., ignoring human factors such as ease of use and user preferences.

- Any design choice will (usually) benefit certain stakeholders while disadvantaging others.

Architects need to remember that their plans and designs are going to change the way people work. Nobody likes change without consultation, so it critical to seek as wide a spectrum of opinions as possible before making important design decisions. One can never satisfy everyone, but one has a better chance of making sensible compromises if one knows where all the stakeholders stand.

In closing

To summarise: enterprise architects need to take an emergent approach to design. Such an approach proceeds in an incremental fashion, pays due attention to the unique features and constraints of an organization, focuses on real rather than imagined problems…and, above all, acknowledges that the success or failure of enterprise architecture rests neither on frameworks nor technology, but on people. My (admittedly limited) experience suggests that such an approach would be more fruitful than the framework-driven approaches that have come to dominate the profession.