On the physical origins of modern AI – an explainer on the 2024 Nobel for physics

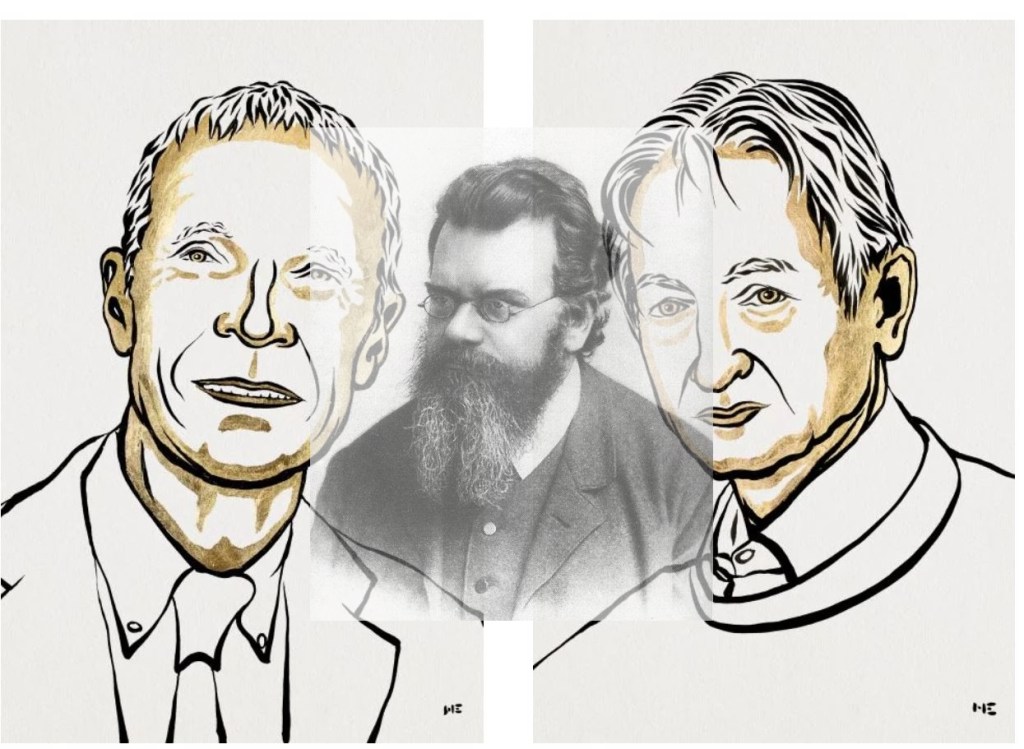

My first reaction when I heard that John Hopfield and Geoffrey Hinton had been awarded the Physics Nobel was – “aren’t there any advances in physics that are, perhaps, more deserving?” However, after a bit of reflection, I realised that this was a canny move on the part of the Royal Swedish Academy of Sciences because it draws attention to the fact that some of the inspiration for the advances in modern AI came from physics. My aim in this post is to illustrate this point by discussing (at a largely non-technical level) two early papers by the laureates which are referred to in the Nobel press release.

The first paper is “Neural networks and physical systems with emergent collective computational abilities”, published by John Hopfield in 1982, and the second is “A Learning Algorithm for Boltzmann Machines”, published by David Ackley, Geoffrey Hinton and Terrence Sejnowski in 1985.

–x–

Some context to begin with: in the early 1980s, new evidence on brain architecture had led to a revived interest in neural networks, which were first proposed by Warren McCulloch and Walter Pitts in this paper in the early 1940s! Specifically, researchers realised that, like brains, neural networks could potentially be used to encode knowledge via weights in connections between neurons. The search was on for models / algorithms that demonstrated this. The papers by Hopfield and Hinton described models which could encode patterns that could be recalled, even when prompted with imperfect or incomplete examples.

Before describing the papers, a few words on their connection to physics:

Both papers draw on the broad field of Statistical Mechanics, a branch of physics that attempts to derive macroscopic properties of objects such as temperature and pressure from the motion of particles (atoms, molecules) that constitute them.

The essence of statistical mechanics lies in the empirically observed fact that large collections of molecules, when subjected to the same external conditions, tend to display the same macroscopic properties. This, despite the fact that the individual molecules are moving about at random speeds and in random directions. James Clerk Maxwell and Ludwig Boltzmann derived the mathematical equation that describes the fraction of particles that have a given speed for the special case of an ideal gas – i.e., a gas in which there are no forces between molecules. The equation can be used to derive macroscopic properties such as the total energy, pressure etc.
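For readers who like to see the formula: the Maxwell-Boltzmann speed distribution is usually written as shown below. This is the standard textbook form rather than anything taken from the two papers, and the symbols are my own labelling: f(v) dv is the fraction of molecules with speeds between v and v + dv, m is the mass of a molecule, k_B is Boltzmann's constant and T is the absolute temperature.

```latex
f(v)\,dv = 4\pi \left( \frac{m}{2\pi k_B T} \right)^{3/2} v^{2}
           \exp\!\left( -\frac{m v^{2}}{2 k_B T} \right) dv
\qquad\Longrightarrow\qquad
\left\langle \tfrac{1}{2} m v^{2} \right\rangle = \tfrac{3}{2}\, k_B T
```

The second expression, the average kinetic energy per molecule, is an example of a macroscopic quantity obtained by averaging over the distribution: it depends only on the temperature, not on what any individual molecule happens to be doing.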

Other familiar macroscopic properties such as magnetism or the vortex patterns in fluid flow can (in principle) also be derived from microscopic considerations. The key point here is that the collective properties (such as pressure or magnetism) are insensitive to microscopic details of what is going on in the system.

With that said about the physics connection, let’s move on to the papers.

–x–

In the introduction to his paper Hopfield poses the following question:

“…physical systems made from a large number of simple elements, interactions among large numbers of elementary components yield collective phenomena such as the stable magnetic orientations and domains in a magnetic system or the vortex patterns in fluid flow. Do analogous collective phenomena in a system of simple interacting neurons have useful “computational” correlates?”

The inspired analogy between molecules and neurons led him to propose the mathematical model behind what are now called Hopfield Networks (which are cited in the Nobel press release). Analogous to the insensitivity of the macroscopic properties of gases to the microscopic dynamics of molecules, he demonstrated that a neural network in which each neuron has simple properties can display stable collective computational properties. In particular, the network model he constructed displayed persistent “memories” that could be reconstructed from smaller parts of the network. As Hopfield noted in the conclusion to the paper:

“In the model network each “neuron” has elementary properties, and the network has little structure. Nonetheless, collective computational properties spontaneously arose. Memories are retained as stable entities or Gestalts and can be correctly recalled from any reasonably sized subpart. Ambiguities are resolved on a statistical basis. Some capacity for generalization is present, and time ordering of memories can also be encoded.”
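To make the idea of recalling a memory from an imperfect prompt a little more concrete, here is a minimal sketch of a Hopfield-style network in plain Python. It is an illustration under my own assumptions, not a reproduction of Hopfield's paper: I use the common textbook formulation with +1/-1 neurons and a simple Hebbian rule for the weights, and the function names and the two 8-neuron patterns are made up for the example.

```python
import random


def train_hebbian(patterns):
    """Build a symmetric weight matrix from +1/-1 patterns (Hebbian rule)."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for pattern in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += pattern[i] * pattern[j] / n
    return weights


def energy(weights, state):
    """Energy of a state; stored patterns sit at low-energy points."""
    n = len(state)
    return -0.5 * sum(
        weights[i][j] * state[i] * state[j] for i in range(n) for j in range(n)
    )


def recall(weights, state, sweeps=5, seed=0):
    """Update one neuron at a time until the state settles."""
    rng = random.Random(seed)
    state = list(state)
    n = len(state)
    for _ in range(sweeps):
        order = list(range(n))
        rng.shuffle(order)  # asynchronous updates in random order
        for i in order:
            field = sum(weights[i][j] * state[j] for j in range(n))
            state[i] = 1 if field >= 0 else -1
    return state


# Two illustrative "memories" (hypothetical patterns, chosen to be orthogonal)
pattern_a = [1, 1, 1, 1, -1, -1, -1, -1]
pattern_b = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train_hebbian([pattern_a, pattern_b])

# Corrupt the first memory by flipping one neuron, then let the network settle
noisy = list(pattern_a)
noisy[0] = -noisy[0]
recalled = recall(weights, noisy)

print("recovered stored pattern:", recalled == pattern_a)
print("energy before:", energy(weights, noisy), "after:", energy(weights, recalled))
```

Each neuron follows a trivially simple rule (take the sign of a weighted sum of its neighbours), yet the network as a whole rolls "downhill" in energy to the nearest stored pattern. That is the collective behaviour the quote above describes.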

–x–

The paper by Hinton and his co-authors, which followed Hopfield’s by a couple of years, describes a network “that is capable of learning the underlying constraints that characterize a domain simply by being shown examples from the domain.” Their network model, which they christened the “Boltzmann Machine” for reasons I’ll explain shortly, could (much like Hopfield’s model) reconstruct a pattern from a part thereof. In their words:

“The network modifies the strengths of its connections so as to construct an internal generative model that produces examples with the same probability distribution as the examples it is shown. Then, when shown any particular example, the network can “interpret” it by finding values of the variables in the internal model that would generate the example. When shown a partial example, the network can complete it by finding internal variable values that generate the partial example and using them to generate the remainder.”

They developed a learning algorithm that could encode the (constraints implicit in a) pattern via the connection strengths between neurons in the network. The algorithm worked but converged very slowly. This limitation is what later led Hinton to develop faster training methods for Restricted Boltzmann Machines, a simplified variant, but that’s another story.

Ok, so why the reference to Boltzmann? The algorithm (both the original and restricted versions) uses the well-known gradient descent method to minimise an objective function, which in this case is an expression for energy. Students of calculus will know that gradient descent is prone to getting stuck in local minima. The usual way to get out of these minima is to take occasional random jumps to higher values of the objective function. The decision rule Hinton and co. use to determine whether or not to make the jump is such that, over time, the relative probability of any two states of the network follows a Boltzmann distribution (different from, but related to, the Maxwell-Boltzmann distribution mentioned earlier). It turns out that the Boltzmann distribution is central to statistical mechanics, but it would take me too far afield to go into that here.
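For those who want to see the decision rule in action, here is a small sketch. It covers only the random "jump" part, not the learning algorithm. The rule used is the logistic form usually quoted for Boltzmann machines: a unit switches on with probability 1 / (1 + exp(-gap / T)), where gap is the energy saved by having the unit on and T plays the role of temperature. The three-unit network, its weights and all function names are hypothetical, chosen only so that the exact Boltzmann distribution can be computed by brute force and compared with what the random updates produce.

```python
import itertools
import math
import random

# Hypothetical symmetric weights for a tiny 3-unit network (illustrative only)
WEIGHTS = {(0, 1): 1.0, (0, 2): -0.5, (1, 2): 0.5}
N_UNITS = 3


def weight(i, j):
    """Symmetric lookup: the connection i-j is the same as j-i."""
    return WEIGHTS.get((min(i, j), max(i, j)), 0.0)


def energy(state):
    """Energy of a global state of 0/1 units (no bias terms, for simplicity)."""
    return -sum(w * state[i] * state[j] for (i, j), w in WEIGHTS.items())


def boltzmann_probabilities(temperature):
    """Exact Boltzmann distribution, by enumerating all 2**N_UNITS states."""
    states = list(itertools.product([0, 1], repeat=N_UNITS))
    factors = [math.exp(-energy(s) / temperature) for s in states]
    total = sum(factors)
    return {s: f / total for s, f in zip(states, factors)}


def sample_frequencies(temperature, steps=100_000, seed=0):
    """Apply the stochastic decision rule repeatedly and count visited states."""
    rng = random.Random(seed)
    state = [0] * N_UNITS
    counts = {}
    for _ in range(steps):
        k = rng.randrange(N_UNITS)  # pick a unit at random
        # Energy gap between unit k being off and on, given the other units
        gap = sum(weight(k, j) * state[j] for j in range(N_UNITS) if j != k)
        p_on = 1.0 / (1.0 + math.exp(-gap / temperature))
        state[k] = 1 if rng.random() < p_on else 0  # may move uphill in energy
        key = tuple(state)
        counts[key] = counts.get(key, 0) + 1
    return {s: c / steps for s, c in counts.items()}


exact = boltzmann_probabilities(1.0)
observed = sample_frequencies(1.0)
for s in sorted(exact):
    print(s, "exact:", round(exact[s], 3), "observed:", round(observed.get(s, 0.0), 3))
```

The two columns should agree closely: low-energy states are visited most often, but higher-energy states are never ruled out entirely, which is what allows the network to escape local minima.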

–x–

Hopfield networks and Boltzmann machines may seem far removed from today’s neural networks. However, it is important to keep in mind that they heralded a renaissance in connectionist approaches to AI after a relatively lean period in the 1960s and 70s. Moreover, vestiges of the ideas implemented in these pioneering models can be seen in some popular architectures today ranging from autoencoders to recurrent neural networks (RNNs).

That’s a good note to wrap up on, but a few points before I sign off:

Firstly, a somewhat trivial matter: it is simply not true that physics Nobels have not been awarded for applied work in the past. A couple of cases in point are the 1909 prize awarded to Marconi and Braun for the invention of wireless telegraphy, and the 1956 prize to Shockley, Bardeen and Brattain for the discovery of the transistor effect, the basis of the chip technology that powers AI today.

Secondly: the Nobel committee, far from showing symptoms of “AI envy”, are actually drawing public attention to the fact that the practice of science, at its best, does not pay heed to the artificial disciplinary boundaries that puny minds often impose on it.

Finally, a much broader and important point: inspiration for advances in science often comes from finding analogies between unrelated phenomena. Hopfield and Hinton’s works are not rare cases. Indeed, the history of science is rife with examples of analogical reasoning that led to paradigm-defining advances.

–x–x–

On being a human in the loop

A recent article in The Register notes that Microsoft has tweaked its fine print to warn users not to take its AI seriously. The relevant update to the license terms reads, “AI services are not designed, intended, or to be used as substitutes for professional advice.”

Aside from the fact that users ought not to believe everything a software vendor claims, Microsoft’s disingenuous marketing is also to blame. For example, their website currently claims that, “Copilot…is unique in its ability to understand … your business data, and your local context.” The truth is that the large language models (LLMs) that underpin Copilot understand nothing.

Microsoft has sold Copilot to organisations by focusing on the benefits of generative AI while massively downplaying its limitations. Although they recite the obligatory lines about having a human in the loop, they neither emphasise nor explain what this entails.

–x–

As Subbarao Kambhampati notes in this article, LLMs should be treated as approximate retrieval engines. This is worth keeping in mind when examining vendor claims.

For example, one of the common use cases that Microsoft touts in its sales spiel is that generative AI can summarise documents.

Sure, it can.

However, given that LLMs are approximate retrieval engines, how much would you be willing to trust an AI-generated summary? The output of an LLM might be acceptable for a summary that will go no further than your desktop, or maybe your team, but would you be comfortable with it going to your chief executive without thorough verification?

My guess is that you would want to be a (critically thinking!) human in that loop.

–x–

In a 1983 paper entitled, The Ironies of Automation, Lisanne Bainbridge noted that:

“The classic aim of automation is to replace human manual control, planning and problem solving by automatic devices and computers. However….even highly automated systems, such as electric power networks, need human beings for supervision, adjustment, maintenance, expansion and improvement. Therefore one can draw the paradoxical conclusion that automated systems still are man-machine systems, for which both technical and human factors are important. This paper suggests that the increased interest in human factors among engineers reflects the irony that the more advanced a control system is, so the more crucial may be the contribution of the human operator.”

Bainbridge’s paper presaged many automation-related failures that could have been avoided with human oversight. Examples range from air disasters to debt recovery and software updates.

–x–

Bainbridge’s prescient warning about the importance of the human in the loop has become even more important in today’s age of AI. Indeed, inspired by Bainbridge’s work, Dr. Mica Endsley recently published a paper on the ironies of AI. In the paper, she lists the following five ironies:

- AI is still not that intelligent: despite hype to the contrary, it is still far from clear that current LLMs can reason. See, for example, the papers I have discussed in this piece.

- The more intelligent and adaptive the AI, the less able people are to understand the system: the point here being that the more intelligent/adaptive the system, the more complex it is – and therefore harder to understand.

- The more capable the AI, the poorer people’s self-adaptive behaviours for compensating for shortcomings: as AIs become better at what they do, humans will tend to offload more and more of their thinking to machines. As a result, when things go wrong, humans will find themselves less and less able to take charge and fix issues.

- The more intelligent the AI, the more obscure it is, and the less able people are to determine its limitations and biases and when to use the AI: as AIs become more capable, their shortcomings will become less obvious. There are at least a couple of reasons for this: a) AIs will become better at hiding (or glossing over) their limitations and biases, and b) the complexity of AIs will make it harder for users to understand their workings.

- The more natural the AI communications, the less able people are to understand the trustworthiness of the AI: good communicators are often able to trick people into believing or trusting them. It would be exactly the same for a sweet-talking AI.

In summary: the more capable the AI, the harder it is to be a competent human in the loop, but the more critical it is to be one.

–x–

Over the last three years, I’ve been teaching a foundational business analytics course at a local university. Recently, I’ve been getting an increasing number of questions from students anxious about the implications of generative AI for their future careers. My responses are invariably about the importance of learning how to be a thinking human in the loop.

This brings up the broader issue of how educational practices need to change in response to the increasing ubiquity of generative AI tools. Key questions include:

- How should AI be integrated into tertiary education?

- How can educators create educationally meaningful classroom activities which involve the use of AI?

- How should assessments be modified to encourage the use of AI in ways that enhance learning?

These are early days yet, but some progress has been made on addressing each of the above. For examples, see:

- The following paper, based on experiences of introducing AI at a Swiss university, describes how to integrate AI into university curricula: https://link.springer.com/article/10.1186/s41239-024-00448-3

- This paper by Ethan and Lilach Mollick provides some interesting creative examples of AI use in the class: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4391243

- This document, by the Australian Tertiary Education Quality and Standards Agency, provides guidance on how AI can be integrated into university assessments: https://www.teqsa.gov.au/sites/default/files/2023-09/assessment-reform-age-artificial-intelligence-discussion-paper.pdf

However, even expert claims should not be taken at face value. An example might help illustrate why:

In his bestselling book on AI, Ethan Mollick gives an example of two architects learning their craft after graduation. One begins his journey by creating designs using traditional methods supplemented by study of good designs and feedback from an experienced designer. The other uses an AI-driven assistant that highlights errors and inefficiencies in his designs and suggests improvements. Mollick contends that the second architect’s learning would be more effective and rapid than that of the first.

I’m not so sure.

A large part of human learning is about actively reflecting on one’s own ideas and reasoning. The key word here is “actively” – meaning that thinking is done by the learner. An AI assistant that points out flaws and inefficiencies may save the student time, but it also detracts from learning because the student is also “saved” from the need to reflect on their own thinking.

–x–

I think it is appropriate to end this piece by quoting from a recent critique of AI by the science fiction writer, Ted Chiang:

“The point of writing essays is to strengthen students’ critical-thinking skills; in the same way that lifting weights is useful no matter what sport an athlete plays, writing essays develops skills necessary for whatever job a college student will eventually get. Using ChatGPT to complete assignments is like bringing a forklift into the weight room; you will never improve your cognitive fitness that way.”

So, what role does AI play in your life: assistant or forklift?

Are you sure??

–x–x–

Acknowledgement: This post was inspired by Sandeep Mehta’s excellent article on the human factors challenge posed by AI systems.

Can Large Language Models reason?

There is much debate about whether Large Language Models (LLMs) have reasoning capabilities: on the one hand, vendors and some researchers claim LLMs can reason; on the other, there are others who contest these claims. I have discussed several examples of the latter in an earlier article, so I won’t rehash them here. However, the matter is far from settled: the debate will go on because new generations of LLMs will continue to get better at (apparent?) reasoning.

It seems to me that a better way to shed light on this issue would be to ask a broader question: what purpose does language serve?

More to the point: do humans use language to think or do they use it to communicate their thinking?

Recent research suggests that language is primarily a tool for communication, not thought (the paper is paywalled, but a summary is available here). Here’s what one of the authors says about this issue:

“Pretty much everything we’ve tested so far, we don’t see any evidence of the engagement of the language mechanisms [in thinking]…Your language system is basically silent when you do all sorts of thinking.”

This is consistent with studies on people who have lost the ability to process words and frame sentences, due to injury. Many of them are still able to do complex reasoning tasks such as play chess or solve puzzles, even though they cannot describe their reasoning in words. Conversely, the researchers find that intellectual disabilities do not necessarily impair the ability to communicate in words.

–x–

The notion that language is required for communicating but not for thinking is far from new. In an essay published in 2017, Cormac McCarthy noted that:

“Problems in general are often well posed in terms of language and language remains a handy tool for explaining them. But the actual process of thinking—in any discipline—is largely an unconscious affair. Language can be used to sum up some point at which one has arrived—a sort of milepost—so as to gain a fresh starting point. But if you believe that you actually use language in the solving of problems I wish that you would write to me and tell me how you go about it.”

So how and why did language arise? In his epic book, The Symbolic Species, the evolutionary biologist and anthropologist Terrence Deacon suggests that it arose out of the necessity to establish and communicate social norms around behaviours, rights and responsibilities as humans began to band into groups about two million years ago. The problem of coordinating work and ensuring that individuals do not behave in disruptive ways in large groups requires a means of communicating with each other about specific instances of these norms (e.g., establishing a relationship, claiming ownership) and, more importantly, resolving disputes around perceived violations. Deacon’s contention is that language naturally arose out of the need to do this.

Starting with C. S. Peirce’s triad of icons, indexes and symbols, Deacon delves into how humans could have developed the ability to communicate symbolically. Symbolic communication is based on the powerful idea that a symbol can stand for something else – e.g., the word “cat” is not a cat, but stands for the (class of) cats. Deacon’s explanation of how humans developed this capability is – in my opinion – quite convincing, but is by no means widely accepted. As echoed by McCarthy in his essay, the mystery remains:

“At some point the mind must grammaticize facts and convert them to narratives. The facts of the world do not for the most part come in narrative form. We have to do that.

So what are we saying here? That some unknown thinker sat up one night in his cave and said: Wow. One thing can be another thing. Yes. Of course that’s what we are saying. Except that he didn’t say it because there was no language for him to say it in [yet]….The simple understanding that one thing can be another thing is at the root of all things of our doing. From using colored pebbles for the trading of goats to art and language and on to using symbolic marks to represent pieces of the world too small to see.”

So, how language originated is still an open question. However, once it took root, it was easily adapted to purposes other than the social imperatives it was invented for. It is a short evolutionary step from rudimentary communication about social norms in hunter-gatherer groups to Shakespeare and Darwin.

Regardless of its origins, however, it seems clear that language is a vehicle for communicating our thinking, but not for thinking or reasoning.

–x–

So, back to LLMs then:

Based, as they are, on a representative corpus of human language, LLMs mimic how humans communicate their thinking, not how humans think. Yes, they can do useful things, even amazing things, but my guess is that these will turn out to have explanations other than intelligence and / or reasoning. For example, in this paper, Ben Prystawski and his colleagues conclude that “we can expect Chain of Thought reasoning to help when a model is tasked with making inferences that span different topics or concepts that do not co-occur often in its training data, but can be connected through topics or concepts that do.” This is very different from human reasoning, which a) is embodied, and thus uses data that is tightly coupled – i.e., relevant to the problem at hand, and b) uses the power of abstraction (e.g. theoretical models).

In time, research aimed at understanding the differences between LLM and human reasoning will help clarify how LLMs do what they do. I suspect it will turn out that LLMs do sophisticated pattern matching and linking at a scale that humans simply cannot and, therefore, give the impression of being able to think or reason.

Of course, it is possible I’ll turn out to be wrong, but while the jury is out, we should avoid conflating communication about thinking with thinking itself.

–x–x–

Postscript:

I asked Bing Image Creator to generate an image for this post. The first prompt I gave it was:

An LLM thinking

It responded with the following image:

I was flummoxed at first, but then I realised it had interpreted LLM as the “Master of Laws” degree. Obviously, I hadn’t communicated my thinking clearly, which is kind of ironic given the topic of this article. Anyway, I tried again, with the following prompt:

A Large Language Model thinking

To which it responded with a much more appropriate image: