Making sense of organizational change – a conversation with Neil Preston

In this instalment of my sensemakers series, I chat with Dr. Neil Preston, an Organisational Psychologist based in Perth, about the very topical issue of organizational change. In a wide-ranging conversation, Neil draws interesting connections between myths that are deeply embedded in Western thought and the way we think about and implement change…and also how we could do it so much better.

KA: Hi Neil, thanks for being a guest on my ongoing series of interviews with sensemakers. You and I have corresponded for at least a year now via email, so it’s a real pleasure to finally meet you, albeit virtually. I’d like to kick things off by asking you to say a bit about yourself and your work.

NP: Well, I’m Dr. Neil Preston. I’m an organizational psychologist…what that means is that I’m specially registered in the area of organizational psychology, much like a clinical psychologist. My background professionally is that I originally worked in mental health, as a senior research psychologist. I’ve published 30 to 40 peer-reviewed papers in psychiatry, mental health and psychometrics, so I know my way around empirical psychology. My real love, however, has always been in organizational and industrial psychology, so in 2006 I decided to leave the Health Department of Western Australia and move into full time consulting.

Consulting work has led me mainly into infrastructure projects – these are very large, complex projects where organisations from both the private and public sector have to get together and create alliances in order to get the work done. My job on these projects – as I often put it to people – is to make the Addams Family look like the Brady Bunch [laughter]. The idea is to get different value systems and organizational cultures to align, with the aim of getting to a shared understanding of project goals and a shared commitment to achieving them.

My original approach was very diagnostic – which is the way psychologists are taught their trade – but as problems have become more complex, I’ve had to resort to dialogical (rather than diagnostic) approaches. As you well know, dialogue is more commensurate with complexity than diagnosis, so dialogical approaches are more appropriate for so-called wicked problems. This approach then led me to complex systems theory which in turn led to an area of work that Paul Culmsee, you and I are looking into: emergent design practices. (Editor’s note: This refers to a method of problem solving in which solutions are not imposed up front but emerge from dialogue between various stakeholders.)

KA: OK, so could you tell us a bit about the kinds of problems you get called in to tackle?

NP: Very broadly speaking, I’m generally called in when organisations have goals that are incommensurate with each other. For example: a billion dollar road that has to be on time and on budget…but, by the way, the alignment of the road also takes out a nesting site of a Carnaby White Tailed Cockatoo which triggers the environmental biodiversity protection act which in turn triggers issues with local councils and so on.

Complexity in projects often arises from situations like these, where the issue is not just about delivering on time and on budget, but also creating a sustainable habitat and ensuring alignment with local governments etc.

KA: So very broadly, I guess one could say that your work deals with the problems associated with change. The reason I put it in this way is that change is something that most people who work in organisations would have had to deal with – either as executives who initiate the change, managers who are charged with implementing it or employees who are on the receiving end of it. The one thing I’ve noticed through experience – initially as a consultant and then working in big organisations – is that change is formulated and implemented in a very prescriptive way. However, the end results are often less than satisfactory because there are many unintended consequences (loss of morale, drop in productivity etc.) – much like the unintended consequences of large infrastructure projects. I’ve long wondered why this is so: why, after decades of research and experience, do we still get it so wrong?

NP: Let me give you an answer from a psychologist’s perspective. There are a couple of sub-disciplines of psychology called depth and archetypal psychology that look at myth. The kind of change management programs that we enact are driven by a (predominantly) Western myth of heroic intervention.

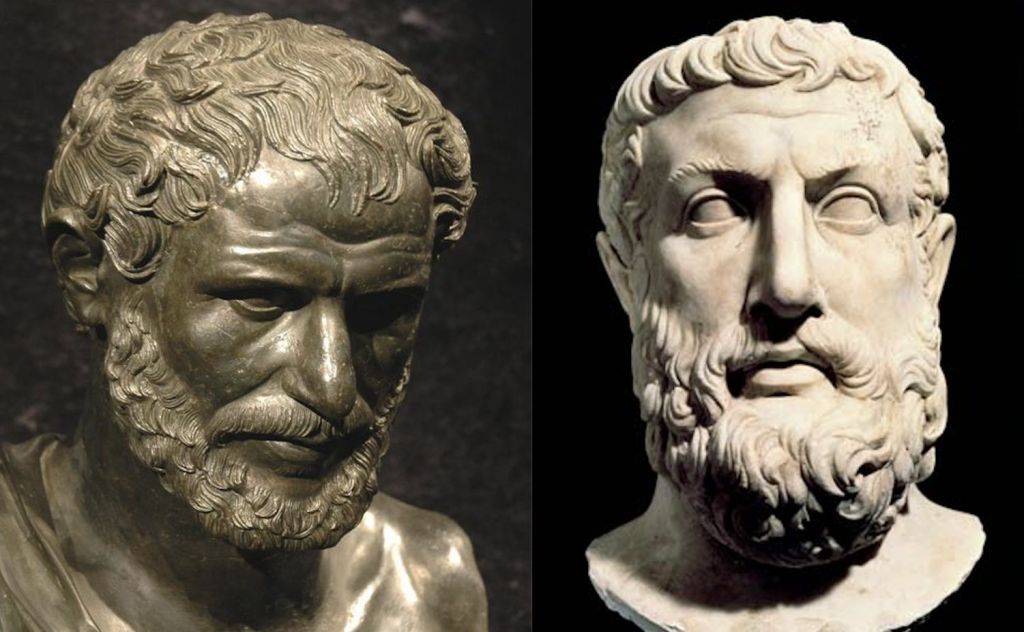

James Hillman, an archetypal psychologist, once said that a myth is what is real. This is somewhat contrary to the usual sense in which the word is used because we usually think of a myth as being something that is not real. However, Hillman is right because a myth is really an archetype – an overarching way of seeing the world in a way that we believe to be true. The myth of the hero – the good guy overcoming all adversity to slay the bad guy – is essentially an interventionist one. It is based on the Graeco-Roman notion of the exercise of individual will. Does that make sense so far?

KA: Yeah absolutely. Please go on.

NP: OK, so this myth is dominant in the Western imagination. For example, any movie that a kid might go to see like, say, Star Wars is really about the exercise of the individual will. In much the same way, the paradigm in which your typical change management program operates is very much (individual) action and intervention oriented. Even going back to Homeric times – the Iliad and Odyssey are essentially stories about individuals exercising authority, power…and excellence is another word that crops up often too. The objective of all this of course is to effect dramatic, full-frontal change.

However, there is a problem with this myth, and it is that it assumes that things are not complex. It assumes that simple linear, cause-effect explanations hold – that if you do A then B will happen (if you restructure you will save costs, for example). Such models are convenient because they seem rational on the surface, perhaps because they are easy to understand. However, they overlook the little details that often trip things up. As a result, such change often has unforeseen consequences.

Unfortunately, much of the stuff that comes out of the Big 4 consultancies is based on this myth. The thing to note is that they do it not because it works but because it is in tune with the dominant myth of the Western business world.

KA: What you are saying definitely strikes a chord. What’s strange to me, however, is that there have been people challenging this for quite a while now. You mentioned the predominantly linear approach – A causes B sort of thinking – that change management practitioners tend to adopt. Now, as you well know, systems theorists and cyberneticists have proposed alternate approaches that are more cognizant of the multifaceted nature of change, and they did so over fifty years ago! What happened to all that? When I read some of the papers, I see that they really speak to the problems we face now, but they seem to have been all but forgotten (Editor’s note: see this post that draws on work by the prominent cyberneticist, Heinz von Foerster, for example). One can’t help but wonder why that is so….

NP: Well that’s because myths are incredibly sticky. We are talking about an ancient myth of the exercise of the individual human will. And, by the way, it’s a very Western thing: I remember once hearing on the radio that the Western notion of the “squeaky wheel getting the grease” has an Eastern counterpart that goes something like, “the loudest goose is first to lose his head.” The point is, the two cultures have a very different way of looking at the world. That myth – the hero myth – is very much brought into the way we tell stories about organisations.

Now, why does that matter? Well, JR Hackman, an organizational psychologist, said it quite brilliantly. He called our fixation on the hero myth (in the context of change) the leadership attribution error – he argues that we tend to over-attribute the success of a change process to the salient things that we can see – which is (usually) the leader. As a result we tend to overlook the hidden factors which give rise to the actual performance of the organization. These factors usually relate to the latent conditions present in the organization rather than specific causes like a leader’s actions.

So there are two types of change: planned change and emergent change. Planned change is the way organisations usually think about change. It is a causal view in which certain actions give rise to certain outcomes. But here is the problem: the causal approach focuses primarily on salient features, ignoring all the other things that might be going on.

Now, cybernetics and systems theory do a better job of taking into account features that are hidden. However, as you mentioned, they have not had much uptake. I think the reason for this is that myths are incredibly sticky…that is the best answer I can give.

KA: Hmm that’s interesting…I’d never thought of it that way – the stickiness of myths as blinding us to other viewpoints. Is there something in the nature of human thought or human minds that makes us latch on to over-simplified explanations?

NP: Well, there’s this notion of cognitive bias – persistent biases in human perception or judgement (Editor’s note: also see this post on the role of cognitive bias in project failure). The leadership attribution error is precisely such a bias. I should point out that these biases aren’t necessarily a problem; they just happen to be the way humans think. And there are good evolutionary reasons for the existence of biases: we can’t process every little bit of information that comes to us through our senses, and these biases offer a means to filter out what is unimportant. Unfortunately, sometimes they cause us to overlook what is important. They are heuristics and, like all heuristics, they don’t always work.

So in the case of leadership attribution bias – yes leadership does have an effect, but it is not as much as what people think. In fact, work done by Wageman (who worked with Hackman) shows that what is more important for team performance are the conditions in which the teams work rather than the qualities or abilities of the leader.

KA: From experience I would have to say that rings true: conditions trump causes any day as far as team performance is concerned.

NP: Yeah and there’s a good reason for it; and it is so simple that we often overlook it. Take the example of sending a rocket to the moon. If you set up the right conditions for the rocket – the right amount of fuel, the right load and so forth, then everything that is necessary for the performance of the rocket is already set up. The person who actually steers the rocket is not as critical to the performance as the conditions are. And the conditions are already present when the rocket is in flight.

Similarly, in the case of organizational change, we should not be looking for causes – be it leadership or planned actions or whatever – but the conditions that might give rise to emergent change.

KA: Yeah, but conditions are causes too, aren’t they?

NP: Yes they are, but the point is that they aren’t salient ones – that is, they aren’t immediately obvious. Moreover, and this is the important point: you do not know the exact outcomes of those causes except that they will in general be positive if the conditions are right and negative if they aren’t.

KA: That makes sense. Now I’d like to ask you about a related matter. When dealing with change or anything else, organisations invariably seem to operate at the limits of their capacity. Leaders always talk about “pushing ourselves” or “pushing the envelope” and so on. On the other hand, there’s also a great deal of talk about flexibility and the capacity for change, but we never seem to build this into our organisations. Is there a way one can do this?

NP: Yes, you can actually build in resilience. Organisations generally like to keep their systems and processes tightly coupled – that is, highly dependent on each other. This tends to make them fragile or prone to breakdown. So, one of the things organisations can do to build resilience is to keep systems and processes loosely coupled. (Editor’s note: for example, devolve decision-making authority to the lowest possible level in the organization. This increases flexibility and responsiveness while having the added benefit of reducing management overhead).

Conditions also play a role here. One of the things that organisations like to talk about is innovation. The point is you can’t put in place processes for innovation but you can create conditions that might foster it. You can’t ask people whether they “did their 15 minutes of innovation today” but you can give them the discretionary freedom to do things that have nothing to do with their work…and they just might do something that goes above and beyond their regular jobs. But of course what underpins all this is trust. Without trust you simply cannot build in flexibility or resilience.

KA: This really strikes a chord and let me tell you why. I read Taleb’s book a while ago. As you probably know, the book is about antifragility, which he defines as the ability to benefit from uncertainty rather than just being resilient to it. After I read the book I wrote a post on what an antifragile IT strategy might look like…and in an uncanny resonance with what you just said, I made the claim that trust would be the single most important element of the strategy [laughter].

NP: Yeah, and trust is not something you receive as much as you give. So as a psychologist I know why a breach of trust is so damaging to people. You know, “Et tu, Brute” – Caesar’s famous line – it was the betrayal of trust that was so damaging. Once trust is gone there’s nothing left.

KA: Indeed, I sometimes feel that the key job of a manager is to develop trust-based relationships with his or her peers and subordinates. However, what I see in the workplace is often (though definitely not always) the opposite: people simply do not trust their managers because managers are quick to pass the blame down (or even across) the hierarchy rather than absorbing it…which arguably, and ethically, is their job. They should be taking the heat so that people can get on with actual work. Unfortunately managers who do this are not as common as they should be.

NP: We’re getting into a complex area here, and it is one that I deal with at length in my masterclass on collaborative maturity and leadership. This is the old scapegoating mechanism at work, and it is related to the leadership attribution error and the hero myth. If the attribution is back to the individual, then the blame must also be attributable to an individual. In fact, I have this slide in one of my presentations that goes, “a scapegoat is almost as useful as the solution to a problem.” [laughter]

Now, there are two questions here. “The scapegoat” is the answer to the question “Who is responsible?” However, it is more important to look at conditions rather than causes, so the real question is, “How did this situation come about?” When you look at “Who” questions, you are immediately going into questions of character. It elicits responses like “Yeah, it’s Kailash’s fault because he is that kind of a guy…he is an INTP or whatever.” What’s happening here is that the problem is explained away because it is attributed to Kailash’s character. You see what is going on…and why it is so dangerous?

KA: Yeah, that’s really interesting.

NP: And you see, then they’ll say something like, “…so let’s take Kailash out and put Neil in”…but the point is that if the conditions remain the same, Neil will fall down the same hole.

KA: It’s interesting the way you tie both things back to the individual – the individual as hero and the individual as scapegoat.

NP: Yes, it’s two sides of the same coin. Followership acquiesces to leadership: Kailash will follow Neil, say, to the Promised Land. If we get there, Neil gets the credit but if we don’t, he gets the blame.

KA: Very interesting, but this brings up another question. Managers and leaders might turn around and say, “It’s all very well to criticize the way we operate, but the fact is that it is impossible to involve all stakeholders in determining, say, a strategy. So in a sense, we are forced to take on the role of “heroes,” as you put it.”

So my question is: what are some of the ways in which organisations can address the difficulties associated with collective decision-making?

NP: Of course, it is often impossible to include all stakeholders in a decision-making process, particularly around matters such as organisational strategy. What you have to do first is figure out who needs to be involved so that all interests are fairly represented. Second, I’m attracted to the whole idea of divergent (open-ended) and convergent (decisive) thinking. For example, if a problem is wicked or complex, there is no point attempting to use expert knowledge or analysis exclusively (Editor’s note: because no single expert holds the answers and there isn’t enough information for a sensible, unbiased analysis). Instead, one has to use collective intelligence or the wisdom of the crowd by seeking opinions from all groups of stakeholders who have a stake in the problem. This is divergent thinking.

However, there comes a time when one has to “make an incision in reality” – i.e. stop consultation and make the best possible decision based on data and ethics; one has to use both IQ and EQ. This is the convergent side of the coin.

Another problem is that one often has the data one needs to make the right decision, but the decision does not get made for reasons of ideology. Then it becomes a question of power rather than collective intelligence: a solution is imposed rather than allowed to emerge.

KA: Well that happens often enough – this “short-circuiting” of the decision-making process by those in positions of power.

NP: Yes, and it is why I think deliberative decision-making, which comes from the Western notion of deliberative democracy – i.e. decision-making based on dialogue and consultation – is the best way forward, but it can be a challenge to implement. Democracy is slow, but it is generally more accurate…

KA: Yes, that’s true, but it can also meander.

NP: Sure, everything is bound by certain limitations (like time) and that’s why you have to know when to intervene. One of the important things for a leader to have in this connection is negative capability – which is not “negative” in the usual sense of the word, but rather the ability to know how to be comfortable with ambiguity and be able to intervene in ambiguous situations in a way that gets some kind of useful outcome.

Of course, acting in such situations also means that one has to have good feedback mechanisms in place; one must know how things are actually working on the ground so that one can take corrective actions if needed. But, in the end, the success of this way of working depends critically on having the right conditions in place. If you don’t set up the right conditions, any intervention can have catastrophic consequences.

If I may talk politically for a minute – the current situation in the Middle East is a classic example of a planned intervention: direct, frontal, dramatic, causal, linear and supposedly rational. However, if the right conditions are not in place, such interventions can have unforeseen consequences that completely overshadow the alleged benefits. And that is exactly what we have seen.

In general I would say that emergent change is more likely to succeed than large-scale, direct, planned change. The example one hears all the time is that of continuous improvement – where small changes are put in place and then adjusted based on feedback on how they are working.

KA: This is a matter of some frustration for me: in general people will agree that collaboration and collective decision-making are good, but when the time comes, they revert to their old, top-down ways of working.

NP: Yes, well when I go into a consulting engagement on collaborative maturity, one of the first things I ask people is whether they want to use the collaborative process to inform people or to influence them. Often I find that they only want to use it to inform people. There is a big difference between the two: influencing is emergent, informing isn’t.

KA: This raises a question: say you walk into an organization where people say that they want to use collaborative processes to influence rather than inform, but you see that the culture is all wrong and it isn’t going to work. Do you actually tell them, “hey, this is not going to work in your organization”?

NP: Well if people don’t feel safe to speak their truth then it isn’t going to work. That’s why I’m so interested in Hackman’s work on conditions over causes. Coming to your question I don’t necessarily tell people that it’s not going to work because I believe it is more productive to invite them to explore the implications of doing things in a certain way. That way, they get to see for themselves how some of the things they are doing might actually be improved. One doesn’t preach but one hands things back to them.

In psychology there are these terms, transference and countertransference. In this context transference would be where a consultant thinks, “I’m a consultant so I’m going to assume a consultant persona by acting and behaving like I have all the answers”, and countertransference would be where the client reinforces this by saying something like, “you are the expert and you have all the answers.” Handing back stops this transference-countertransference cycle. So what we do is to get people to explore the consequences of their actions and thus see things that might have been hidden from their view. It is not to say “I told you so,” but rather “what are the implications of going down this path.” The idea is to appeal to the ethical or good side in human beings…and I believe that human beings are fundamentally good rather than not.

KA: I like your use of the word “ethical” here. I think that is really important and is what is often missing. One hears a lot about ethics in business these days, but it is most often taught and talked about in a very superficial way. The reality, however, is that the resolution of most wicked problems involves ethical considerations rather than logic and rationality…and this is something that many people do not understand. It isn’t about doing things right, rather it is about doing the right things.

NP: Yes, and this is related to what I call “meaning over motivation” – the idea being that instead of attempting to motivate people to do something, try providing them with meaning. When you do this you will often find that change comes for free. And it is worth noting that meaning has both an emotional and rational component – or, put a little bit differently, an ethical and logical one. In one of his books, Daniel Pink makes the point that uncoupling ethics from profit can have catastrophic consequences…and we have good examples of that in recent history.

The broad lesson here is that if the conditions aren’t right then it is inevitable that unethical behavior will dominate.

KA: Yeah well human nature will ensure that won’t it?

NP: [laughs] Yeah, and you don’t need a psychologist to tell you that.

KA: [laughs] Indeed…and I think that would be a good note on which to bring this conversation to a close. Neil, thanks so much for your time and insights. It’s been a pleasure to chat with you and I look forward to catching up with you again…hopefully in person, in the not too distant future.

NP: Yeah, Singapore and Perth are not that far apart…

Ironies of enterprise information technology

Introduction

On one of my random walks through Google Scholar, I stumbled on an interesting paper entitled, Ironies of Automation. The main message of the paper is nicely summarized in its first few lines:

The classic aim of automation is to replace human manual control, planning and problem solving by automatic devices and computers. However… even highly automated systems, such as electric power networks, need human beings for supervision, adjustment, maintenance, expansion and improvement. Therefore one can draw the paradoxical conclusion that automated systems still are man-machine systems, for which both technical and human factors are important. This paper suggests that the increased interest in human factors among engineers reflects the irony that the more advanced a control system is, so the more crucial may be the contribution of the human operator.

These lines were written over thirty years ago, but are ever more apt today – such paradoxes are rife, not only in automation, but in any field in which technology plays an important part. To illustrate my point, I highlight a couple of ironies drawn from a domain that is likely to be familiar to many readers of this blog: the world of enterprise IT. I also present a brief discussion of how these ironies of enterprise IT can be avoided.

Ironies of enterprise IT

In the last few decades information technology has found its way into diverse organisational functions. This trend has been accompanied by an explosive growth in new technologies. As a result of this, corporate IT infrastructures have become ever more complex and the costs of maintaining them have burgeoned. Quite naturally, the focus has thus turned to taming both complexity and cost. The favoured approaches to tackling this problem are standardisation and/or outsourcing. However, as I discuss below, both often lead to ironic outcomes.

An irony of standardisation

Enterprise IT environments tend to evolve rapidly, reflecting the many demands made on them by the organisational functions they support. This is good because it means that IT is doing what it should be doing: supporting the work of the parent organisation. On the other hand, this can result in unwieldy environments that are difficult (not to mention, expensive) to maintain. One way to address this is to impose standards relating to processes (such as ITIL) and infrastructure (such as SAP or any enterprise application).

The question is, how well does such standardisation work in practice?

In his book entitled, From Control to Drift, Claudio Ciborra pointed out that IT infrastructures in organisations tend to drift – i.e. they escape processes, plans and standards, and take on a life of their own. The reason they drift is that they are subject to unpredictable forces within and outside the hosting organisation. The imposition of standards may slow the drift but cannot arrest it entirely. Infrastructures are therefore best seen as ever-evolving constructs consisting of systems, people and processes that interact with each other in often unforeseen ways. As he put it:

Corporate information infrastructures are puzzles, or better collages, and so are the design and implementation processes that lead to their construction and operation. They are embedded in larger, contextual puzzles and collages. Interdependence, intricacy, and interweaving of people, systems, and processes are the culture bed of infrastructure. Patching, alignment of heterogeneous actors and making do are the most frequent approaches…irrespective of whether management [is] planning or strategy oriented, or inclined to react to contingencies.

The essential message here is that standards and processes overlook the fact that enterprises are complex social systems that are subject to internal and external influences which cannot always be foreseen. Dealing with these, more often than not, entails the implementation of hacks and workarounds that violate the imposed standards and thus nullify the benefits of standardisation.

In summary, “standardised” IT environments often end up having a plethora of non-standard hacks and workarounds that are necessary, but are generally messy and expensive to maintain.

An irony of outsourcing

One of the main reasons for outsourcing IT is to reduce costs. Yes, I am aware that many decision-makers claim that their primary reason is to reduce complexity rather than cost, but the choices they make often belie their claims. The irony is that in their eagerness to control costs, they often end up increasing them because they overlook hidden factors. I explain this in brief below, drawing on my post on the transaction costs of outsourcing.

The basic idea is simple – it is that the upfront fee quoted by the vendor is but a fraction of the total cost that will be incurred by the customer. Some of the costs that are generally not included in upfront costs are:

- Search/selection costs: these are the costs associated with searching for and shortlisting vendors.

- Bargaining costs: these are costs associated with negotiations for a mutually acceptable contract.

- Costs of coordinating work: these are costs associated with coordinating external and internal work. This is particularly important in the case of software-as-a-service because the effort required to interface cloud applications with in-house systems is often underestimated.

- Costs of enforcement and change: These are costs associated with enforcing the terms of the contract and those associated with change.

The point to note is that these costs are rarely if ever mentioned by the vendor, but almost always show up in one form or another. It is therefore important for the customer to try and get a handle on these before entering into any commercial agreements. The problem is, some of these costs (particularly the last two listed above: coordination, and enforcement and change) are hard if not impossible to figure out upfront. For example, if the relationship turns sour the only solution might be to switch vendors. The cost associated with this is often significant and is borne entirely by the customer. A lack of awareness of such costs associated with outsourcing will invariably result in ironical outcomes.
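To make the arithmetic concrete, here is a minimal sketch in Python that tallies the cost categories listed above. The class name, field names and all the figures are hypothetical, chosen only for illustration; they are not drawn from any real engagement, and in practice the coordination and enforcement figures would be rough guesses at best.

```python
from dataclasses import dataclass


@dataclass
class OutsourcingEstimate:
    """Hypothetical cost breakdown for one outsourcing deal (illustrative only)."""
    upfront_fee: float             # fee quoted by the vendor
    search_selection: float        # searching for and shortlisting vendors
    bargaining: float              # negotiating a mutually acceptable contract
    coordination: float            # coordinating external and internal work
    enforcement_and_change: float  # enforcing the contract and handling change

    def hidden_costs(self) -> float:
        """Sum of the costs that the vendor's quote does not include."""
        return (self.search_selection + self.bargaining
                + self.coordination + self.enforcement_and_change)

    def total_cost(self) -> float:
        """Quoted fee plus all hidden transaction costs."""
        return self.upfront_fee + self.hidden_costs()

    def upfront_share(self) -> float:
        """Fraction of the total cost that the quoted fee represents."""
        return self.upfront_fee / self.total_cost()


# Made-up figures: the hidden costs happen to equal the quoted fee.
estimate = OutsourcingEstimate(
    upfront_fee=500_000,
    search_selection=40_000,
    bargaining=60_000,
    coordination=250_000,
    enforcement_and_change=150_000,
)

print(f"Total cost: {estimate.total_cost():,.0f}")
print(f"Quoted fee as share of total: {estimate.upfront_share():.0%}")
```

With these invented numbers the quoted fee accounts for only half of what the customer ends up paying. The exact split will differ in every case; the sketch simply shows why comparing vendors on the upfront fee alone can mislead.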

In summary: attempts to control costs by outsourcing IT can have the contrary effect of increasing them.

Avoiding ironical outcomes

So how does one avoid ironical outcomes?

I have only one piece of advice to offer here: when planning IT architectures or outsourcing initiatives, use an incremental or emergent approach that avoids big designs or commitments upfront. Using an emergent approach not only limits risk, it also provides opportunities for learning. Most important, it enables one to verify that the envisaged benefits are not just wishful architect- or manager-level thinking.

Below I outline what such an approach might entail for the two ironies discussed earlier:

- For infrastructures/systems: avoid grandiose system designs that attempt to span the “enterprise” – remember that one size will not fit all of your users. Consequently, enterprise architectures and governance systems should provide guidelines, rather than detailed prescriptions. As Anders Jensen-Waud puts it in this post: they should foster resilience and adaptability rather than conformance.

- For outsourcing: start small, possibly with a small project or system. This will help you get a sense for how outsourcing would work in your environment and help you figure out whether the vendor you have selected is really right for you. Remember, no two environments are identical so others’ lessons learned may be considerably less useful than you think. Finally, if you’re going to the cloud, be sure to factor in costs and technical challenges associated with interfacing external apps with in-house ones.

Yes, there’s nothing particularly profound here, it is just common sense…but you know what they say about the commonality of common sense.

Conclusion

In this post I have highlighted some ironies of enterprise information systems and have briefly outlined an emergent approach to avoiding them. I believe but cannot prove that ironical outcomes are almost guaranteed if one takes a monolithic, enterprise-style approach or a let’s-outsource-it-all attitude to enterprise information technology. Such a view overlooks the messy little details and differences that trip up big designs and grandiose plans. In the end, the only way to avoid ironical outcomes is to start small, learn from experience and incorporate that learning in an incremental manner in whatever you’re building or doing. Yes, you might end up with something you did not envisage at the start, but you will have learnt much along the way. More important, perhaps, is that you will be able to rest assured that it works.