
On the physical origins of modern AI – an explainer on the 2024 Nobel for physics

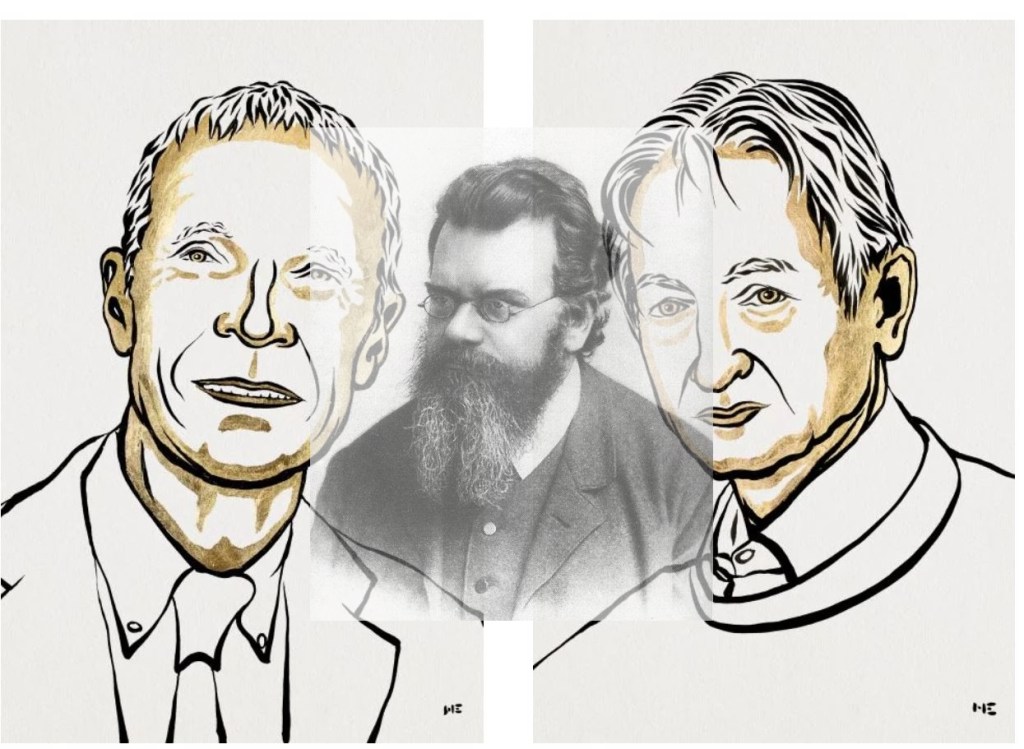

My first reaction when I heard that John Hopfield and Geoffrey Hinton had been awarded the Physics Nobel was – “aren’t there any advances in physics that are, perhaps, more deserving?” However, after a bit of reflection, I realised that this was a canny move on the part of the Royal Swedish Academy of Sciences because it draws attention to the fact that some of the inspiration for the advances in modern AI came from physics. My aim in this post is to illustrate this point by discussing (at a largely non-technical level) two early papers by the laureates which are referred to in the Nobel press release.

The first paper is “Neural networks and physical systems with emergent collective computational abilities”, published by John Hopfield in 1982, and the second is “A Learning Algorithm for Boltzmann Machines”, published by David Ackley, Geoffrey Hinton and Terrence Sejnowski in 1985.

–x–

Some context to begin with: in the early 1980s, new evidence on brain architecture had led to a revived interest in neural networks, which were first proposed by Warren McCulloch and Walter Pitts in this paper in the early 1940s! Specifically, researchers realised that, like brains, neural networks could potentially be used to encode knowledge via weights in connections between neurons. The search was on for models / algorithms that demonstrated this. The papers by Hopfield and Hinton described models which could encode patterns that could be recalled, even when prompted with imperfect or incomplete examples.

Before describing the papers, a few words on their connection to physics:

Both papers draw on the broad field of Statistical Mechanics, a branch of physics that attempts to derive macroscopic properties of objects such as temperature and pressure from the motion of particles (atoms, molecules) that constitute them.

The essence of statistical mechanics lies in the empirically observed fact that large collections of molecules, when subjected to the same external conditions, tend to display the same macroscopic properties. This, despite the fact that the individual molecules are moving about at random speeds and in random directions. James Clerk Maxwell and Ludwig Boltzmann derived the mathematical equation that describes the fraction of particles that have a given speed for the special case of an ideal gas – i.e., a gas in which there are no forces between molecules. The equation can be used to derive macroscopic properties such as the total energy, pressure etc.
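For readers who would like to see the equation, the Maxwell-Boltzmann speed distribution is usually written as below. The symbols are my additions rather than anything defined in this post: $m$ is the mass of a molecule, $T$ the absolute temperature, $k_B$ Boltzmann's constant, and $f(v)\,dv$ the fraction of molecules with speeds between $v$ and $v + dv$.

$$
f(v) = 4\pi \left( \frac{m}{2\pi k_B T} \right)^{3/2} v^{2} \exp\left( -\frac{m v^{2}}{2 k_B T} \right)
$$

Note that the only property of the gas that enters is the temperature, which is one way of seeing why the macroscopic behaviour does not depend on what any individual molecule is doing.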

Other familiar macroscopic properties such as magnetism or the vortex patterns in fluid flow can (in principle) also be derived from microscopic considerations. The key point here is that the collective properties (such as pressure or magnetism) are insensitive to microscopic details of what is going on in the system.

With that said about the physics connection, let’s move on to the papers.

–x–

In the introduction to his paper Hopfield poses the following question:

“…physical systems made from a large number of simple elements, interactions among large numbers of elementary components yield collective phenomena such as the stable magnetic orientations and domains in a magnetic system or the vortex patterns in fluid flow. Do analogous collective phenomena in a system of simple interacting neurons have useful “computational” correlates?”

The inspired analogy between molecules and neurons led him to propose the mathematical model behind what are now called Hopfield Networks (which are cited in the Nobel press release). Analogous to the insensitivity of the macroscopic properties of gases to the microscopic dynamics of molecules, he demonstrated that a neural network in which each neuron has simple properties can display stable collective computational properties. In particular the network model he constructed displayed persistent “memories” that could be reconstructed from smaller parts of the network. As Hopfield noted in the conclusion to the paper:

“In the model network each “neuron” has elementary properties, and the network has little structure. Nonetheless, collective computational properties spontaneously arose. Memories are retained as stable entities or Gestalts and can be correctly recalled from any reasonably sized subpart. Ambiguities are resolved on a statistical basis. Some capacity for generalization is present, and time ordering of memories can also be encoded.”
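To make the idea of recalling a memory from an imperfect prompt a little more concrete, here is a minimal sketch of a Hopfield-style network in Python. It is an illustration under simplifying assumptions, not a reproduction of the paper: neurons take values +1/-1, weights are set by the standard Hebbian (outer-product) rule, and the two stored patterns, the function names and the number of sweeps are all my own choices.

```python
import numpy as np

def train_hopfield(patterns):
    """Build Hebbian (outer-product) weights from patterns with entries +1/-1."""
    patterns = np.asarray(patterns, dtype=float)
    n_neurons = patterns.shape[1]
    weights = patterns.T @ patterns / n_neurons
    np.fill_diagonal(weights, 0.0)  # no neuron is connected to itself
    return weights

def energy(weights, state):
    """Energy of a network state; stored memories sit in low-energy states."""
    return -0.5 * state @ weights @ state

def recall(weights, state, sweeps=10, seed=0):
    """Update one neuron at a time, in random order, until nothing changes."""
    rng = np.random.default_rng(seed)
    state = np.array(state, dtype=float)
    for _ in range(sweeps):
        previous = state.copy()
        for i in rng.permutation(len(state)):
            state[i] = 1.0 if weights[i] @ state >= 0 else -1.0
        if np.array_equal(state, previous):
            break
    return state

# Two hypothetical 8-neuron "memories" (chosen to be orthogonal).
memory_a = np.array([1, 1, 1, 1, -1, -1, -1, -1], dtype=float)
memory_b = np.array([1, -1, 1, -1, 1, -1, 1, -1], dtype=float)
weights = train_hopfield([memory_a, memory_b])

# Prompt the network with a corrupted copy of memory_a (first neuron flipped).
corrupted = memory_a.copy()
corrupted[0] = -1.0
recalled = recall(weights, corrupted)

print("recovered memory_a:", np.array_equal(recalled, memory_a))
print("energy before / after:", energy(weights, corrupted), energy(weights, recalled))
```

The corrupted prompt settles back into the stored pattern, and the energy drops as it does so. This is the sense in which the memories are "stable entities": they are the valleys of an energy landscape defined by the connection weights.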

–x–

Hinton’s paper, co-authored with Ackley and Sejnowski, followed Hopfield’s by a couple of years and describes a network “that is capable of learning the underlying constraints that characterize a domain simply by being shown examples from the domain.” Their network model, which they christened “Boltzmann Machine” for reasons I’ll explain shortly, could (much like Hopfield’s model) reconstruct a pattern from a part thereof. In their words:

“The network modifies the strengths of its connections so as to construct an internal generative model that produces examples with the same probability distribution as the examples it is shown. Then, when shown any particular example, the network can “interpret” it by finding values of the variables in the internal model that would generate the example. When shown a partial example, the network can complete it by finding internal variable values that generate the partial example and using them to generate the remainder.”

They developed a learning algorithm that could encode the (constraints implicit in a) pattern via the connection strengths between neurons in the network. The algorithm worked but converged very slowly. This limitation is what led Hinton to later invent Restricted Boltzmann Machines, but that’s another story.

Ok, so why the reference to Boltzmann? The algorithm (both the original and restricted versions) uses the well-known gradient descent method to minimise an objective function, which in this case is an expression for energy. Students of calculus will know that gradient descent is prone to getting stuck in local minima. The usual way to get out of these minima is to take occasional random jumps to higher values of the objective function. The decision rule Hinton and co. use to determine whether or not to make the jump is such that the relative probabilities of any two states converge to a Boltzmann distribution (different from, but related to, the Maxwell-Boltzmann distribution mentioned earlier). It turns out that the Boltzmann distribution is central to statistical mechanics, but it would take me too far afield to go into that here.
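A small numerical sketch may help here. I am assuming the logistic form of the decision rule that is commonly given for Boltzmann machines: a unit switches on with probability 1 / (1 + exp(-ΔE / T)), where ΔE is the energy of the "off" state minus the energy of the "on" state and T is a temperature-like parameter. The function name and the particular numbers below are mine, chosen purely for illustration.

```python
import math

def probability_on(energy_gap, temperature):
    """Chance that a unit switches on, where energy_gap = E_off - E_on."""
    return 1.0 / (1.0 + math.exp(-energy_gap / temperature))

energy_gap = 1.5   # hypothetical: the "on" state is lower in energy by 1.5 units
temperature = 2.0  # hypothetical temperature

p_on = probability_on(energy_gap, temperature)
p_off = 1.0 - p_on

# The ratio of the two probabilities is exactly the Boltzmann factor.
odds = p_on / p_off
boltzmann_ratio = math.exp(energy_gap / temperature)

print(f"p_on = {p_on:.3f}, p_off = {p_off:.3f}")
print(f"odds = {odds:.6f}, Boltzmann factor = {boltzmann_ratio:.6f}")
```

The lower-energy state is favoured, but the higher-energy one is never ruled out, which is what allows the network to jump out of a local minimum. At high temperature the two states become almost equally likely; as the temperature is lowered the network increasingly prefers the lower-energy state.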

–x–

Hopfield networks and Boltzmann machines may seem far removed from today’s neural networks. However, it is important to keep in mind that they heralded a renaissance in connectionist approaches to AI after a relatively lean period in the 1960s and 70s. Moreover, vestiges of the ideas implemented in these pioneering models can be seen in some popular architectures today ranging from autoencoders to recurrent neural networks (RNNs).

That’s a good note to wrap up on, but a few points before I sign off:

Firstly, a somewhat trivial matter: it is simply not true that physics Nobels have not been awarded for applied work in the past. A couple of cases in point are the 1909 prize awarded to Marconi and Braun for the invention of wireless telegraphy, and the 1956 prize to Shockley, Bardeen and Brattain for the discovery of the transistor effect, the basis of the chip technology that powers AI today.

Secondly: the Nobel committee, contrary to showing symptoms of “AI envy”, are actually drawing public attention to the fact that the practice of science, at its best, does not pay heed to the artificial disciplinary boundaries that puny minds often impose on it.

Finally, a much broader and important point: inspiration for advances in science often comes from finding analogies between unrelated phenomena. Hopfield and Hinton’s works are not rare cases. Indeed, the history of science is rife with examples of analogical reasoning that led to paradigm-defining advances.

–x–x–

A prelude to machine learning

What is machine learning?

The term machine learning gets a lot of airtime in the popular and trade press these days. As I started writing this article, I did a quick search for recent news headlines that contained this term. Here are the top three results with datelines within three days of the search:

The truth about hype usually tends to be quite prosaic and so it is in this case. Machine learning, as Professor Yaser Abu-Mostafa puts it, is simply about “learning from data.” And although the professor is referring to computers, this is so for humans too – we learn through patterns discerned from sensory data. As he states in the first few lines of his wonderful (but mathematically demanding!) book entitled, Learning From Data:

If you show a picture to a three-year-old and ask if there’s a tree in it, you will likely get a correct answer. If you ask a thirty year old what the definition of a tree is, you will likely get an inconclusive answer. We didn’t learn what a tree is by studying a [model] of what trees [are]. We learned by looking at trees. In other words, we learned from data.

In other words, the three-year-old forms a model of what constitutes a tree through a process of discerning a common pattern between all objects that grown-ups around her label “trees” (the data). She can then “predict” that something is (or is not) a tree by applying this model to new instances presented to her.

This is exactly what happens in machine learning: the computer (or more correctly, the algorithm) builds a predictive model of a variable (like “treeness”) based on patterns it discerns in data. The model can then be applied to predict the value of the variable (e.g. is it a tree or not) in new instances.

With that said for an introduction, it is worth contrasting this machine-driven process of model building with the traditional approach of building mathematical models to predict phenomena as in, say, physics and engineering.

What are models good for?

Physicists and engineers model phenomena using physical laws and mathematics. The aim of such modelling is both to understand and predict natural phenomena. For example, a physical law such as Newton’s Law of Gravitation is itself a model – it helps us understand how gravity works and make predictions about (say) where Mars is going to be six months from now. Indeed, all theories and laws of physics are but models that have wide applicability.

(Aside: Models are typically expressed via differential equations. Most differential equations are hard to solve analytically (or exactly), so scientists use computers to solve them numerically. It is important to note that in this case computers are used as calculation tools; they play no role in model-building.)

As mentioned earlier, the role of models in the sciences is twofold – understanding and prediction. In contrast, in machine learning the focus is usually on prediction rather than understanding. The predictive successes of machine learning have led certain commentators to claim that scientific theory building is obsolete and science can advance by crunching data alone. Such claims are overblown, not to mention hubristic, for although a data scientist may be able to predict with accuracy, he or she may not be able to tell you why a particular prediction is obtained. This lack of understanding can mislead and can even have harmful consequences, a point that’s worth unpacking in some detail…

Assumptions, assumptions

A model of a real world process or phenomenon is necessarily a simplification. This is essentially because it is impossible to isolate a process or phenomenon from the rest of the world. As a consequence it is impossible to know for certain that the model one has built has incorporated all the interactions that influence the process / phenomenon of interest. It is quite possible that potentially important variables have been overlooked.

The selection of variables that go into a model is based on assumptions. In the case of model building in physics, these assumptions are made upfront and are thus clear to anybody who takes the trouble to read the underlying theory. In machine learning, however, the assumptions are harder to see because they are implicit in the data and the algorithm. This can be a problem when data is biased or an algorithm opaque.

Problems of bias and opacity become more acute as datasets increase in size and algorithms become more complex, especially when applied to social issues that have serious human consequences. I won’t go into this here, but for examples the interested reader may want to have a look at Cathy O’Neil’s book, Weapons of Math Destruction, or my article on the dark side of data science.

As an aside, I should point out that although assumptions are usually obvious in traditional modelling, they are often overlooked out of sheer laziness or, more charitably, lack of awareness. This can have disastrous consequences. The global financial crisis of 2008 can – to some extent – be blamed on the failure of trading professionals to understand assumptions behind the model that was used to calculate the value of collateralised debt obligations.

It all starts with a straight line….

Now that we’ve taken a tour of some of the key differences between model building in the old and new worlds, we are all set to start talking about machine learning proper.

I should begin by admitting that I overstated the point about opacity: there are some machine learning algorithms that are transparent as can possibly be. Indeed, chances are you know the algorithm I’m going to discuss next, either from an introductory statistics course in university or from plotting relationships between two variables in your favourite spreadsheet. Yea, you may have guessed that I’m referring to linear regression.

In its simplest avatar, linear regression attempts to fit a straight line to a set of data points in two dimensions. The two dimensions correspond to a dependent variable (traditionally denoted by $y$) and an independent variable (traditionally denoted by $x$). An example of such a fitted line is shown in Figure 1. Once such a line is obtained, one can “predict” the value of the dependent variable for any value of the independent variable. In terms of our earlier discussion, the line is the model.
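For those who prefer code to pictures, here is a minimal sketch of fitting a straight line in Python using NumPy's `polyfit`. The five data points are made up for illustration and have nothing to do with the data in Figure 1.

```python
import numpy as np

# Hypothetical data: x is the independent variable, y the dependent variable.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = np.array([1.1, 2.9, 5.2, 7.1, 8.8])

# Least-squares fit of a degree-1 polynomial, i.e. a straight line.
slope, intercept = np.polyfit(x, y, deg=1)

def predict(new_x):
    """The fitted line is the model: plug in x, get a predicted y."""
    return slope * new_x + intercept

print(f"slope = {slope:.2f}, intercept = {intercept:.2f}")
print(f"predicted y at x = 5: {predict(5.0):.2f}")
```

The two numbers returned by the fit (slope and intercept) are all there is to the model, which is why linear regression is about as transparent as a machine learning algorithm can get.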

Figure 1 also serves to illustrate that linear models are going to be inappropriate in most real world situations (the straight line does not fit the data well). But it is not so hard to devise methods to fit more complicated functions.

The important point here is that since machine learning is about finding functions that accurately predict dependent variables for as yet unknown values of the independent variables, most algorithms make explicit or implicit choices about the form of these functions.

Complexity versus simplicity

At first sight it seems a no-brainer that complicated functions will work better than simple ones. After all, if we choose a nonlinear function with lots of parameters, we should be able to fit a complex data set better than a linear function can (see Figure 2 – the complicated function fits the datapoints better than the straight line). But there’s a catch: although the ability to fit a dataset increases with the flexibility of the fitting function, increasing complexity beyond a point will invariably reduce predictive power. Put another way, a complex enough function may fit the known data points perfectly but, as a consequence, will inevitably perform poorly on unknown data. This is an important point so let’s look at it in greater detail.

Recall that the aim of machine learning is to predict values of the dependent variable for as yet unknown values of the independent variable(s). Given a finite (and usually, very limited) dataset, how do we build a model that we can have some confidence in? The usual strategy is to partition the dataset into two subsets, one containing 60 to 80% of the data (called the training set) and the other containing the remainder (called the test set). The model is then built – i.e. an appropriate function fitted – using the training data and verified against the test data. The verification process consists of comparing the predicted values of the dependent variable with the known values for the test set.
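The partition-fit-verify procedure just described can be sketched in a few lines of Python. Everything specific here is an assumption made for illustration: the synthetic data (a noisy straight line), the 70/30 split, and the use of mean squared error as the measure of how well predictions match the known test values.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical dataset: 50 noisy observations of a straight-line relationship.
x = rng.uniform(0, 10, size=50)
y = 2.0 * x + 1.0 + rng.normal(0, 1.0, size=50)

# Shuffle the row indices, then put 70% in the training set and the rest in the test set.
indices = rng.permutation(len(x))
n_train = len(x) * 7 // 10
train_idx, test_idx = indices[:n_train], indices[n_train:]

# Build the model using the training data only.
slope, intercept = np.polyfit(x[train_idx], y[train_idx], deg=1)

# Verify: compare predictions against the known values in the test set.
predictions = slope * x[test_idx] + intercept
test_mse = float(np.mean((predictions - y[test_idx]) ** 2))

print(f"training points: {len(train_idx)}, test points: {len(test_idx)}")
print(f"mean squared error on the test set: {test_mse:.3f}")
```

The important discipline is that the test rows play no part in the fit; they are looked at only after the model has been fixed.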

Now, it should be intuitively clear that the more complicated the function, the better it will fit the training data.

Question: Why?

Answer: Because complicated functions have more free parameters – for example, linear functions of a single (independent) variable have two parameters (slope and intercept), quadratics have three, cubics four and so on. The mathematician John von Neumann is believed to have said, “With four parameters I can fit an elephant, and with five I can make him wiggle his trunk.” See this post for a nice demonstration of the literal truth of his words.

Put another way, complex functions are wrigglier than simple ones, and – by suitable adjustment of parameters – their “wriggliness” can be adjusted to fit the training data better than functions that are less wriggly. Figure 2 illustrates this point well.

This may sound like you can have your cake and eat it too: choose a complicated enough function and you can fit both the training and test data well. Not so! Keep in mind that the resulting model (fitted function) is built using the training set alone, so a good fit to the test data is not guaranteed. In fact, it is intuitively clear that a function that fits the training data perfectly (as in Figure 2) is likely to do a terrible job on the test data.

Question: Why?

Answer: Remember, as far as the model is concerned, the test data is unknown. Hence, the greater the wriggliness in the trained model, the less likely it is to fit the test data well. Remember, once the model is fitted to the training data, you have no freedom to tweak parameters any further.
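The two answers above are easy to check numerically. The sketch below fits polynomials of increasing degree to a small, noisy training set and reports the error on both the training data and a separate test set. The underlying sine curve, the noise level and the choice of degrees are all my assumptions; this is not the data behind Figure 2.

```python
import numpy as np

rng = np.random.default_rng(0)

def true_function(x):
    """Hypothetical underlying relationship that the model is trying to learn."""
    return np.sin(np.pi * x)

# A small training set and a larger test set, both with added noise.
x_train = np.linspace(-1, 1, 12)
y_train = true_function(x_train) + rng.normal(0, 0.2, size=x_train.size)
x_test = rng.uniform(-1, 1, size=200)
y_test = true_function(x_test) + rng.normal(0, 0.2, size=x_test.size)

def mean_squared_error(coefficients, x, y):
    return float(np.mean((np.polyval(coefficients, x) - y) ** 2))

train_error, test_error = {}, {}
for degree in (1, 3, 9):
    # Parameters are fitted on the training data alone.
    coefficients = np.polyfit(x_train, y_train, deg=degree)
    train_error[degree] = mean_squared_error(coefficients, x_train, y_train)
    test_error[degree] = mean_squared_error(coefficients, x_test, y_test)
    print(f"degree {degree}: train error = {train_error[degree]:.4f}, "
          f"test error = {test_error[degree]:.4f}")
```

The training error can only go down as the degree goes up, because each higher-degree polynomial contains the lower-degree ones as special cases. The test error offers no such guarantee, and for the wrigglier fits it typically ends up well above the training error.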

This tension between simplicity and complexity of models is one of the key principles of machine learning and is called the bias-variance tradeoff. Bias here refers to the error that arises from a lack of flexibility, and variance to the sensitivity of the fitted function to the particular training data used. In general simpler functions have greater bias and lower variance and complex functions, the opposite. Much of the subtlety of machine learning lies in developing an understanding of how to arrive at the right level of complexity for the problem at hand – that is, how to tweak parameters so that the resulting function fits the training data just well enough so as to generalise well to unknown data.
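For the mathematically inclined, the tradeoff is usually stated via the following decomposition of the expected squared prediction error at a point $x$. The notation is mine: the data are assumed to be generated as $y = f(x) + \varepsilon$, where the noise $\varepsilon$ has zero mean and variance $\sigma^2$, $\hat{f}$ is the fitted function, and the expectation is taken over different training sets (and the noise).

$$
\mathbb{E}\left[ \left( y - \hat{f}(x) \right)^{2} \right]
= \underbrace{\left( \mathbb{E}[\hat{f}(x)] - f(x) \right)^{2}}_{\text{bias}^{2}}
+ \underbrace{\mathbb{E}\left[ \left( \hat{f}(x) - \mathbb{E}[\hat{f}(x)] \right)^{2} \right]}_{\text{variance}}
+ \underbrace{\sigma^{2}}_{\text{irreducible error}}
$$

The first two terms depend on the choice of model and can be traded off against each other; the third is noise in the data that no model can remove.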

Note: those who are curious to learn more about the bias-variance tradeoff may want to have a look at this piece. For details on how to achieve an optimal tradeoff, search for articles on regularization in machine learning.
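As a taste of what regularization looks like in practice, here is a minimal sketch of ridge regression, one common form of it, applied to a polynomial fit. The data, the degree and the penalty values are hypothetical, and for simplicity the sketch penalises every coefficient, including the constant term, which most library implementations do not do.

```python
import numpy as np

def ridge_polyfit(x, y, degree, penalty):
    """Least-squares polynomial fit with a penalty on the size of the coefficients."""
    features = np.vander(x, degree + 1)  # columns: x^degree, ..., x, 1
    gram = features.T @ features + penalty * np.eye(degree + 1)
    return np.linalg.solve(gram, features.T @ y)

rng = np.random.default_rng(1)
x = np.linspace(-1, 1, 12)
y = np.sin(np.pi * x) + rng.normal(0, 0.2, size=x.size)

# Same flexible (degree 9) function, fitted with a weak and a strong penalty.
weak = ridge_polyfit(x, y, degree=9, penalty=1e-3)
strong = ridge_polyfit(x, y, degree=9, penalty=1.0)

print("size of coefficients, weak penalty:  ", np.linalg.norm(weak))
print("size of coefficients, strong penalty:", np.linalg.norm(strong))
```

A larger penalty shrinks the coefficients and so tames the wriggliness of the fitted function. Choosing the penalty is then a matter of finding the value that gives the best performance on data the model has not seen.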

Unlocking unstructured data

The discussion thus far has focused primarily on quantitative or enumerable data (numbers and categories) that’s stored in a structured format (i.e. as columns and rows in a spreadsheet or database table). This is fine as far as it goes, but the fact is that much of the data in organisations is unstructured, the most common examples being text documents and audio-visual media. This data is virtually impossible to analyse computationally using relational database technologies (such as SQL) that are commonly used by organisations.

The situation has changed dramatically in the last decade or so. Text analysis techniques that once required expensive software and high-end computers have now been implemented in open source languages such as Python and R, and can be run on personal computers. For problems that require computing power and memory beyond that, cloud technologies make it possible to do so cheaply. In my opinion, the ability to analyse textual data is the most important advance in data technologies in the last decade or so. It unlocks a world of possibilities for the curious data analyst. Just think, all those comment fields in your survey data can now be analysed in a way that was never possible in the relational world!

There is a general impression that text analysis is hard. Although some of the advanced techniques can take a little time to wrap one’s head around, the basics are simple enough. Yea, I really mean that – for proof, check out my tutorial on the topic.
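To back up the claim that the basics are simple, here is a minimal word-count sketch in Python (my tutorial uses R; the idea is the same). The survey comments and the tiny stopword list are invented for illustration, and real text analysis would involve rather more careful cleaning.

```python
import re
from collections import Counter

# Hypothetical free-text comments from a survey.
comments = [
    "The support team was slow to respond.",
    "Great product, but support needs work.",
    "Slow delivery and slow refunds.",
]

# Common words that carry little meaning on their own (a deliberately tiny list).
STOPWORDS = {"the", "was", "to", "but", "and"}

def tokenize(text):
    """Lowercase the text, split it into words and drop the stopwords."""
    return [word for word in re.findall(r"[a-z']+", text.lower())
            if word not in STOPWORDS]

word_counts = Counter(word for comment in comments for word in tokenize(comment))
print(word_counts.most_common(2))  # [('slow', 3), ('support', 2)]
```

Even a crude count like this surfaces the recurring themes in the comments, which is something a relational query over a free-text column cannot easily do.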

Wrapping up

I could go on for a while. Indeed, I was planning to delve into a few algorithms of increasing complexity (from regression to trees and forests to neural nets) and then close with a brief peek at some of the more recent headline-grabbing developments like deep learning. However, I realised that such an exploration would be too long and (perhaps more importantly) defeat the main intent of this piece which is to give starting students an idea of what machine learning is about, and how it differs from preexisting techniques of data analysis. I hope I have succeeded, at least partially, in achieving that aim.

For those who are interested in learning more about machine learning algorithms, I can suggest having a look at my “Gentle Introduction to Data Science using R” series of articles. Start with the one on text analysis (link in last line of previous section) and then move on to clustering, topic modelling, naive Bayes, decision trees, random forests and support vector machines. I’m slowly adding to the list as I find the time, so please do check back again from time to time.

Note: This post is written as an introduction to the Data, Algorithms and Meaning subject that is part of the core curriculum of the Master of Data Science and Innovation program at UTS. I’m co-teaching the subject in Autumn 2018 with Alex Scriven and Rory Angus.